Core ML Explained: Apple's Machine Learning Framework

This article will help you assess the main features of Core ML and how you can leverage machine learning in your apps.

Let’s have a look at Core ML, Apple’s machine learning framework.

It is the foundational framework built to provide optimized performance by leveraging the CPU, GPU and Neural Engine with minimal memory and power consumption. The Core ML APIs can be used across Apple's platforms and can supercharge apps with intelligent abilities.

Here is a brief timeline of the evolution of Core ML:

- Core ML was introduced in June 2017 during the Worldwide Developers Conference (WWDC) and emphasized user privacy by focusing on on-device machine learning over cloud-based solutions from the competition. In December 2017 Google released a toolchain to convert TensorFlow models to the Core ML file format.

- In 2018 Apple released Core ML 2 at WWDC, reducing model sizes, improving speed and, most importantly, adding the ability to create custom Core ML models.

- Core ML 3 was released in 2019 and added support for on-device model training. It arrived together with the Create ML desktop app, which supports custom model training through a GUI and lowers the threshold for creating custom machine learning models even further.

Now, let's have a closer look at the features of Core ML.

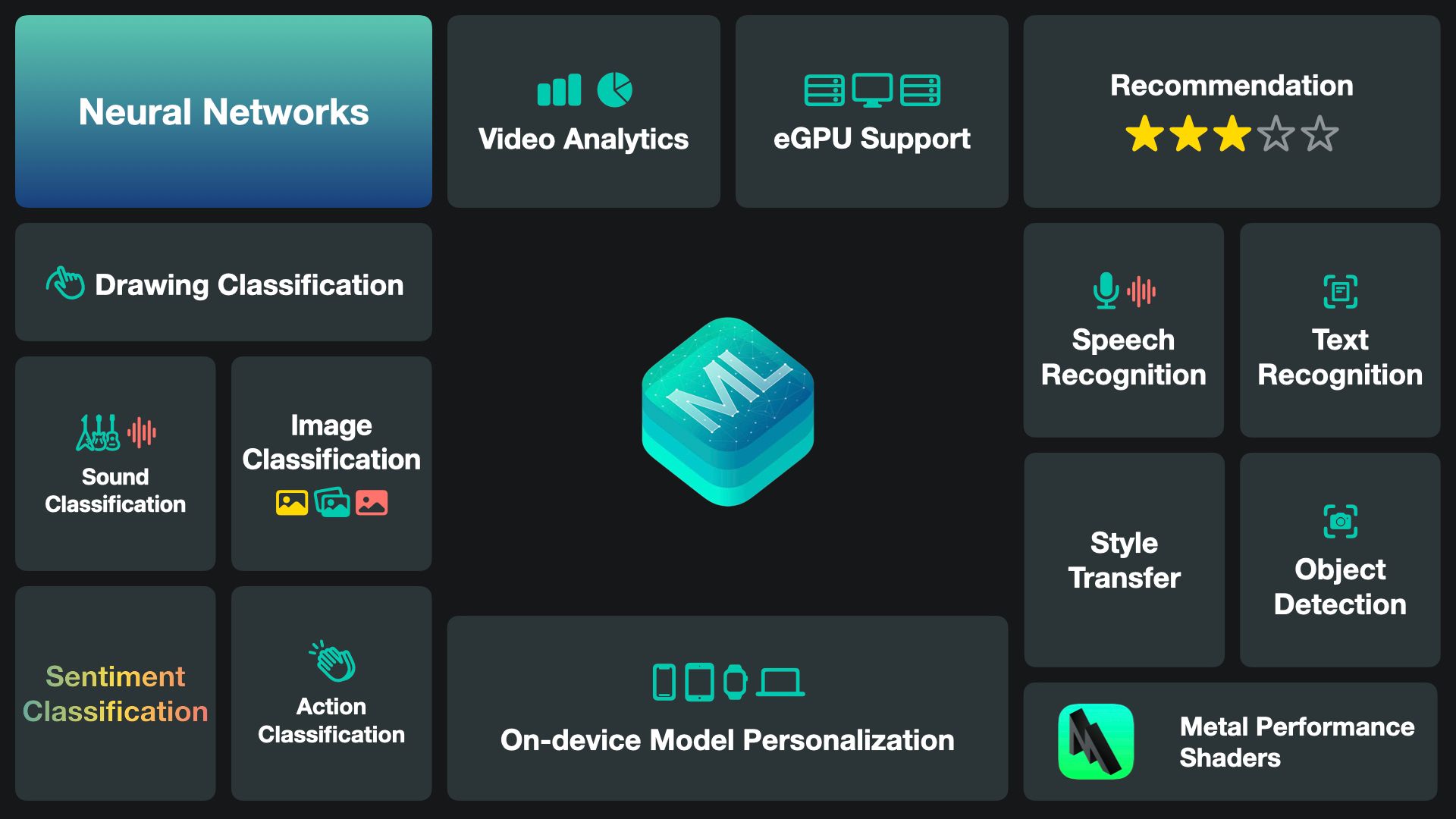

Core ML Features

Core ML is optimized for on-device performance across a broad variety of model types, especially on the latest Apple hardware and Apple silicon. You can use it to make your applications smarter, enabling new experiences for your apps running on iPhone, iPad, Apple Watch or Mac.

Core ML can use models to classify images and sounds, or even actions and drawings. It can work with audio or text, making use of neural networks, external GPUs and the powerful Metal Performance Shaders using the Metal framework.

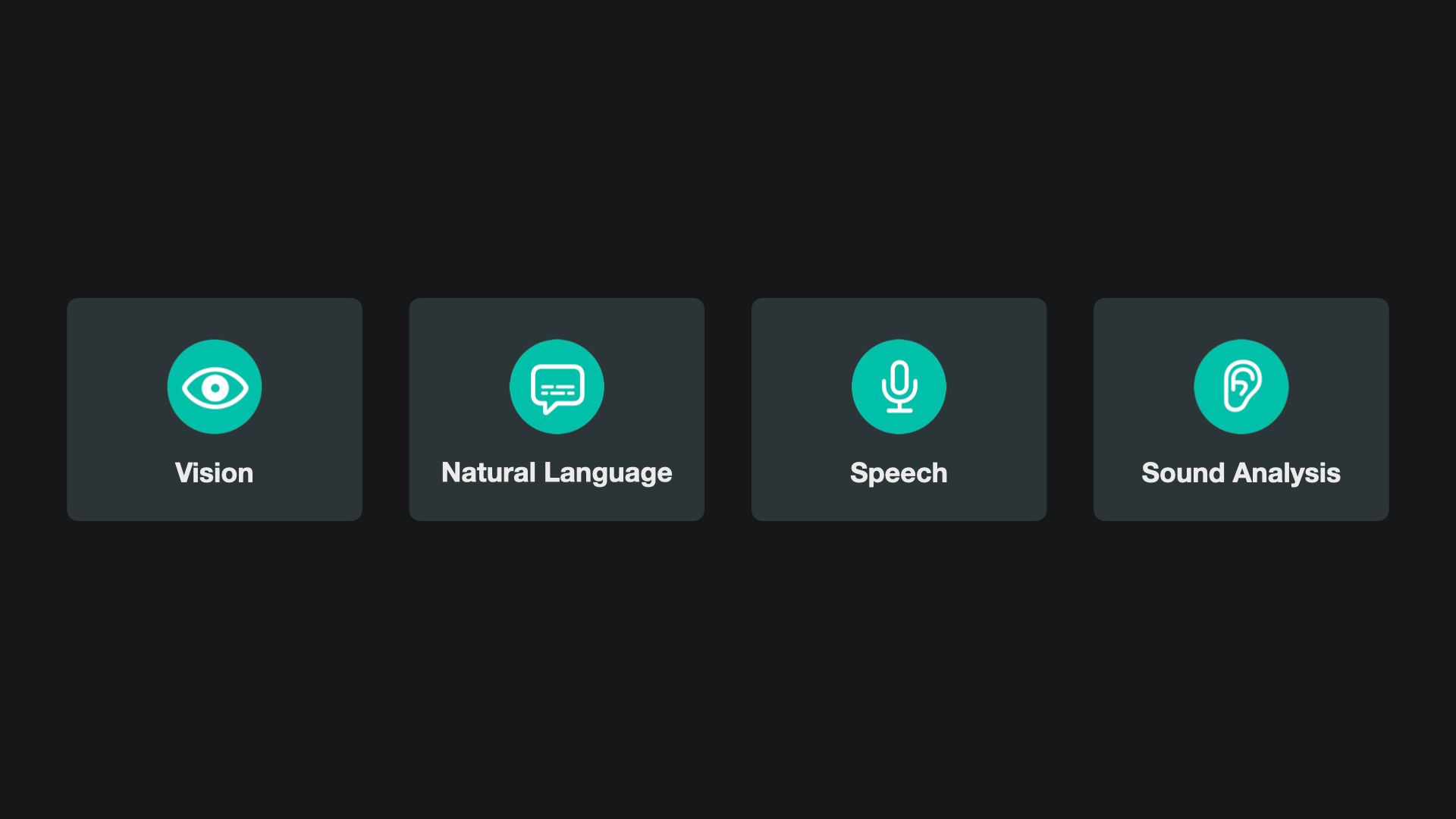

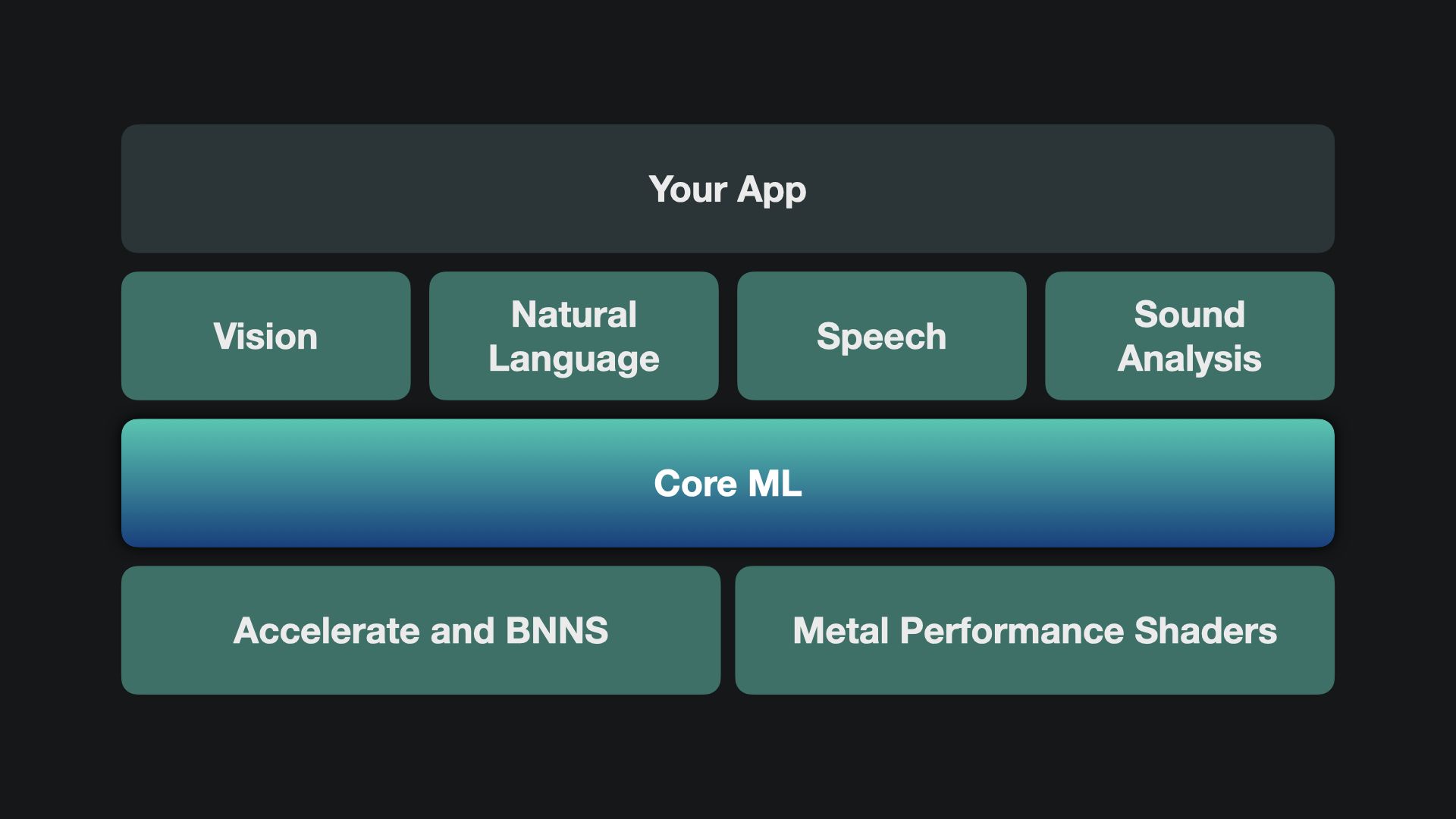

There are dedicated machine learning APIs that allow you to leverage these capabilities with just a few lines of code:

- With the Vision Framework you can build features to process and analyze images and videos using computer vision technologies, like image classification, object detection and action classification.

- With the Natural Language Framework you can analyze natural language text, segmenting it into paragraphs, sentences or words, and tag information about those segments.

- With the Speech Framework you can take advantage of speech recognition on live or prerecorded audio for a variety of languages.

- With the Sound Analysis Framework you can analyze audio and use it to classify sounds, like traffic noise or singing birds.

With these top-level APIs, you can leverage the underlying Core ML capabilities and supercharge your apps with smart features that were unthinkable on-device just a few years ago. But if your needs don't fit the use cases covered by these frameworks, you can use Core ML directly. On a lower level, you can also leverage Accelerate or Metal Performance Shaders for best-in-class performance.

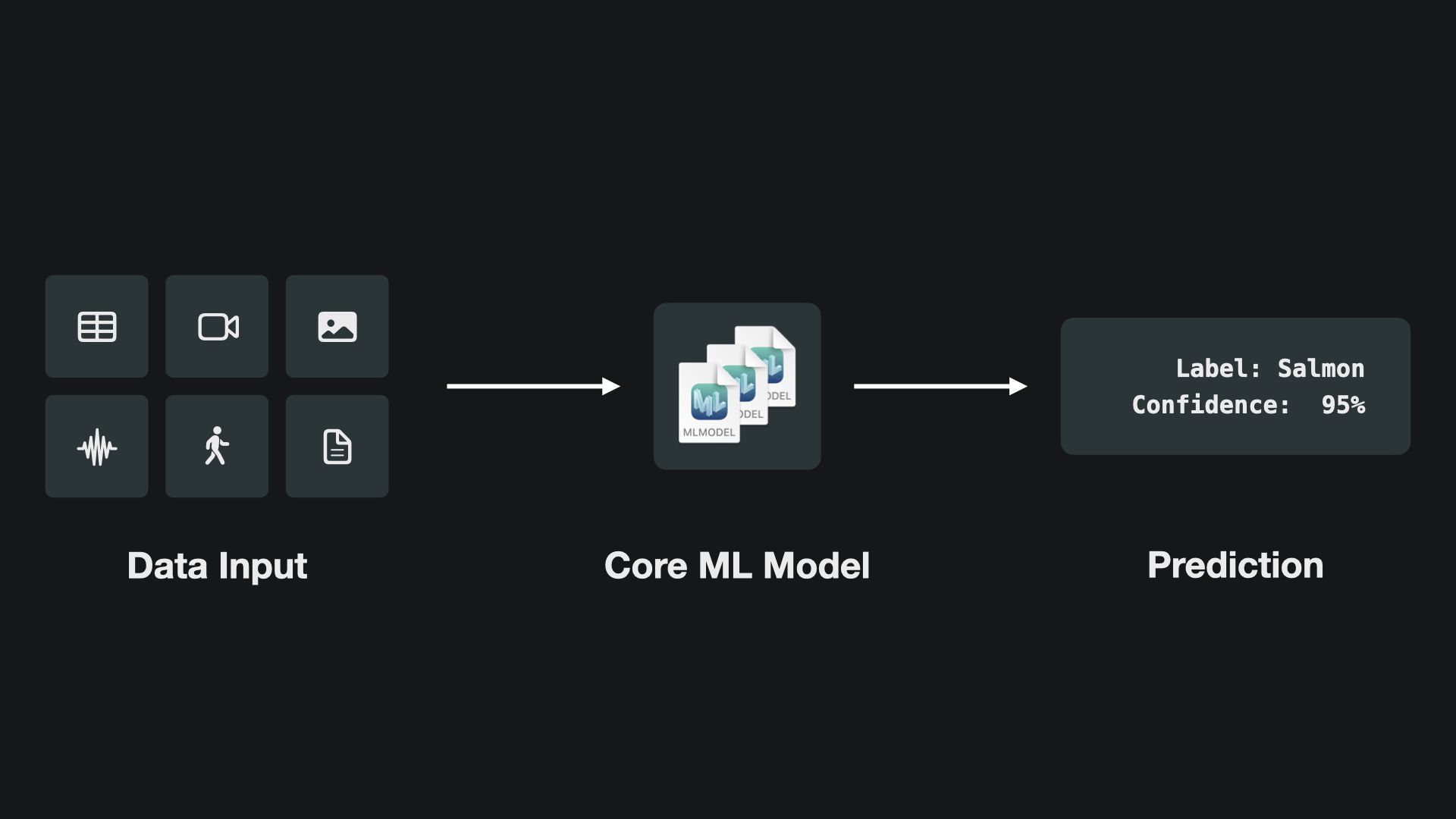

How does Core ML work?

The process is quite simple. Your application provides some sort of data input to Core ML, for example text, images or a video feed. Core ML runs the input through the model to execute its trained algorithm and returns the inferred labels and their confidence as your predictions. This process is called inference. Depending on the type and quality of the model, inference should return predictions with high confidence for the use case you want to address.
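
To make the shape of an inference result concrete, here is a minimal sketch in plain Python. It does not call Core ML: the `classify` function, the label names and the raw scores are all hypothetical stand-ins for the output of a trained model. It only illustrates how raw scores could be turned into labels with confidence values, here using a softmax, which is one common choice and an assumption of this sketch.

```python
import math

def classify(scores):
    """Turn raw per-label scores into (label, confidence) pairs.

    `scores` is a hypothetical stand-in for a trained model's raw output:
    a dict mapping label -> unnormalized score.
    """
    # Subtract the maximum score before exponentiating for numerical stability.
    top = max(scores.values())
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    # Normalize so the confidences sum to 1 (softmax).
    confidences = {label: e / total for label, e in exps.items()}
    # Most confident label first.
    return sorted(confidences.items(), key=lambda item: item[1], reverse=True)

# Made-up scores for a single photo; a real model would compute these.
predictions = classify({"cat": 4.0, "dog": 2.0, "sunset": 0.5})
best_label, best_confidence = predictions[0]
print(f"{best_label}: {best_confidence:.2f}")
```

In an app, you would typically read the top prediction and only act on it when its confidence is above a threshold that suits your use case.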

You are already using many apps powered by Core ML in a number of different ways. Let’s have a look at some examples.

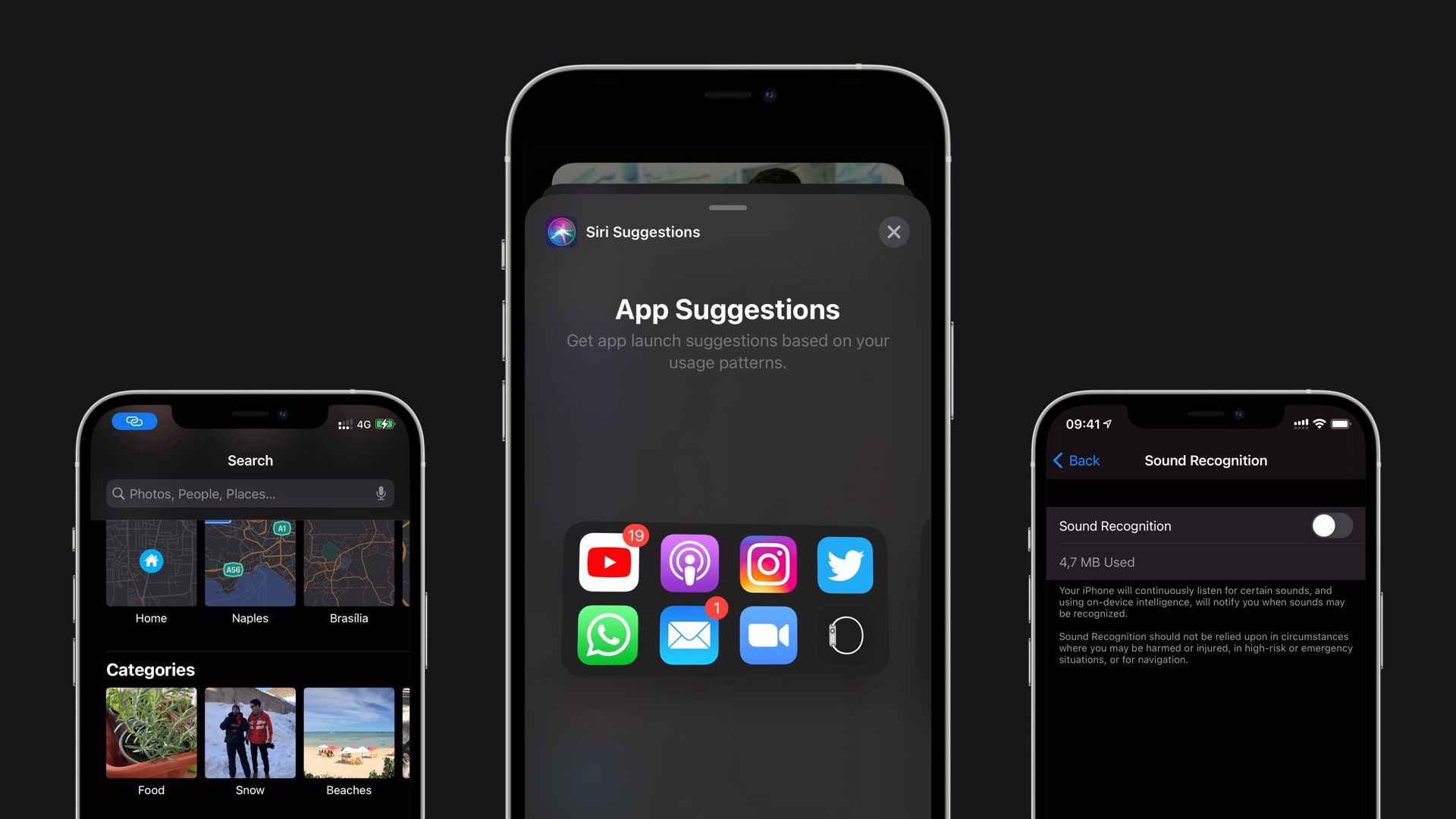

Let’s start with an app you might use every day on your iPhone. In the Photos app you can leverage the search features to reveal some of the amazing Core ML capabilities. Beyond face detection, the app uses image classification to automatically detect people in your photos. Based on the images in your library, Photos automatically creates categories, such as food, snow or sunsets. It can even identify the content of your shots, for example by detecting cats or dogs. All of this is happening on-device, keeping your privacy intact.

At WWDC 2020 Sound Recognition was introduced as an Accessibility feature. It identifies a broad variety of sounds, such as alarms, animals or your doorbell ringing. It uses sound classification and was trained to detect specific sounds when turned on. So if the doorbell rings, you can get a notification on your iPhone.

And then there is Siri of course, Apple’s smart assistant that provides you with all kinds of suggestions based on how you use your phone, such as in the App Suggestions widget, available on iOS 14. Again, all of this is done leveraging on-device machine learning capabilities.

Using, Converting & Creating Models

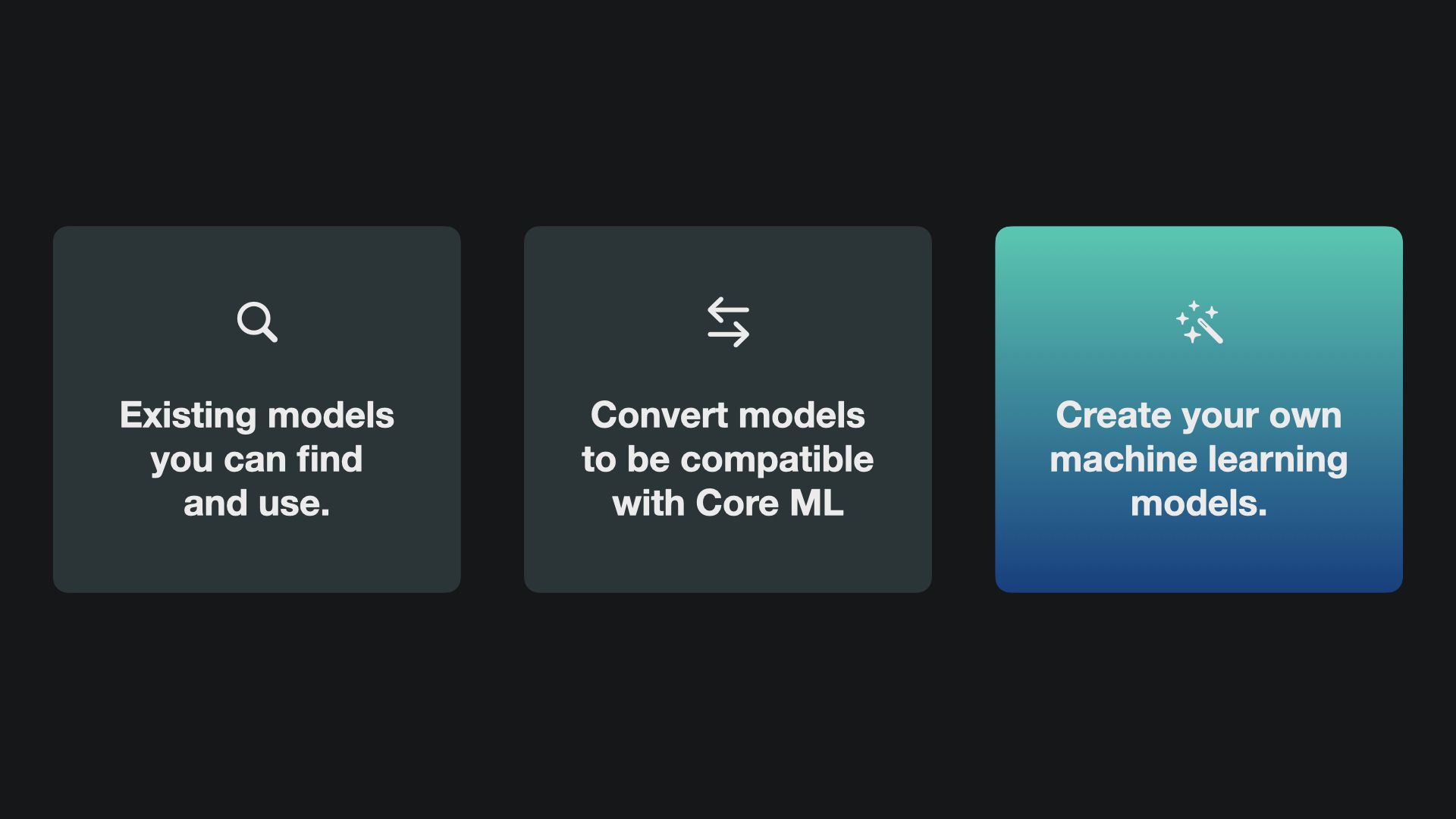

Core ML is a powerful toolchain to supercharge your apps with smart features and the beauty is, you don’t have to do all of this on your own. There are existing models out there ready for you to use directly in Core ML. You can also convert compatible models from other machine learning toolchains. And you can of course create your own custom machine learning models.

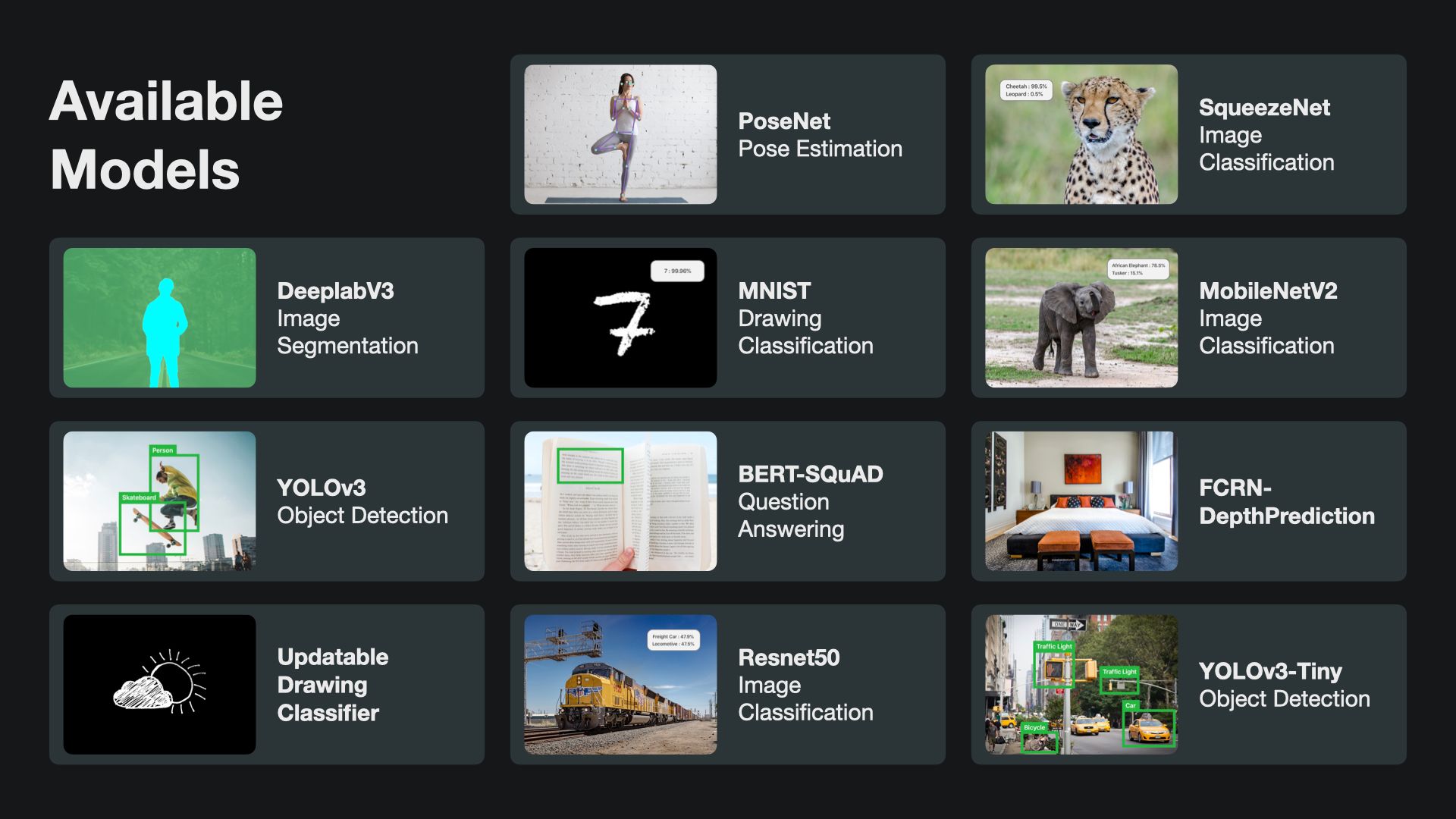

There are many preconfigured machine learning models from the research community designed for Core ML. They are available directly from the Apple website. There are models for image classification, object detection, pose detection, drawing classification, text analysis and many others. Beyond that, there are many more to be found online.

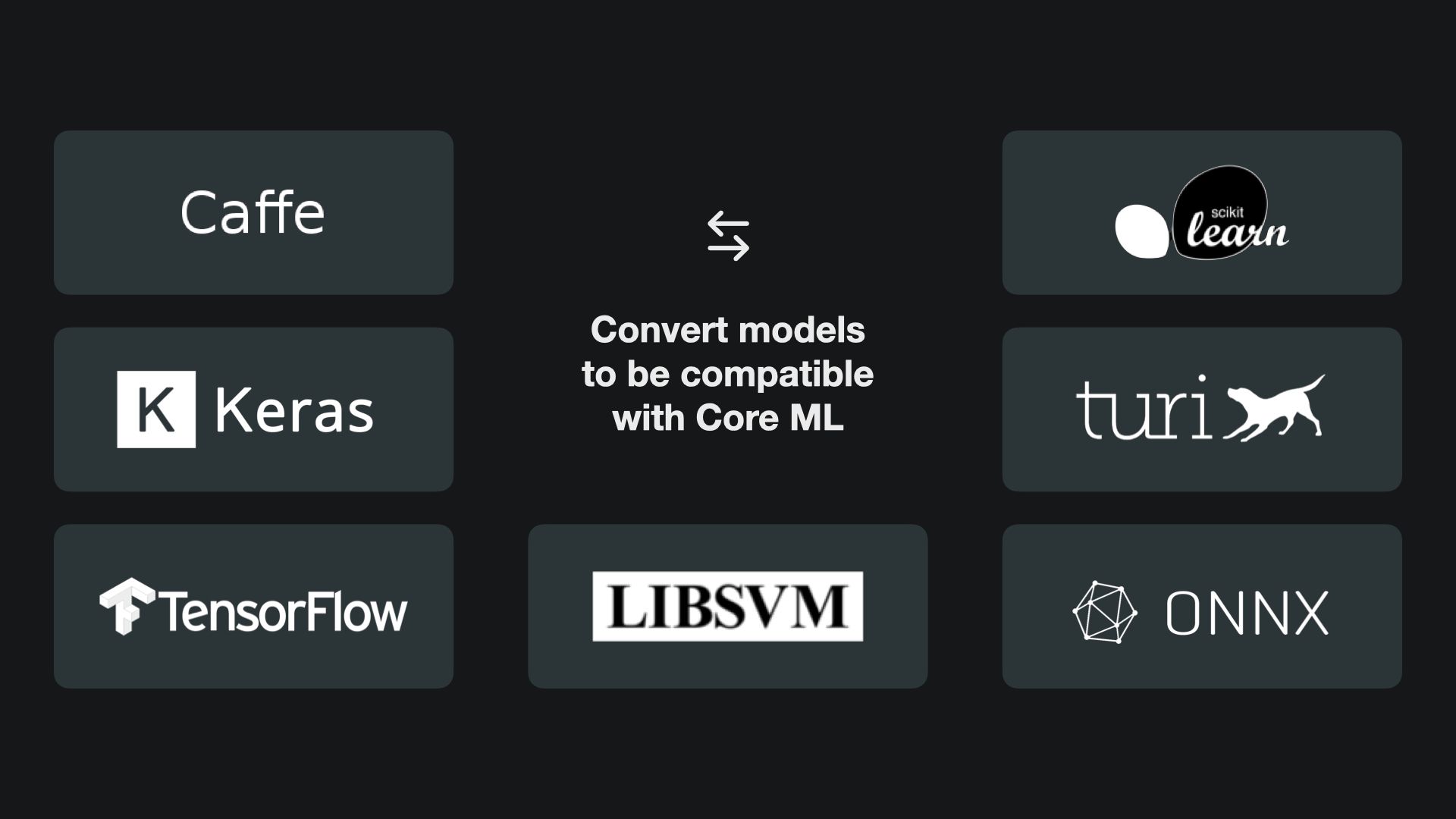

If your use case is not covered by one of the existing models provided by Apple, you can convert models from other toolchains like TensorFlow, Turi and many others. This gives you access to the vast number of machine learning models available in the developer community.
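
As a rough sketch of what such a conversion can look like, the snippet below uses the coremltools Python package to convert a TensorFlow model. The function names, the `name` parameter and the default output name are hypothetical and chosen only for illustration. It assumes a recent coremltools release (version 5 or later, for the `convert_to` argument) with TensorFlow installed, and it is not the only way to set up a conversion.

```python
def output_path(name, convert_to):
    """Pick the file name for the converted model.

    ML programs are saved as .mlpackage bundles, while the older
    neural network format is saved as a single .mlmodel file.
    """
    extension = ".mlpackage" if convert_to == "mlprogram" else ".mlmodel"
    return name + extension

def convert_to_core_ml(tf_model, name="MyModel", convert_to="mlprogram"):
    """Convert a TensorFlow model and save it in a Core ML format.

    `tf_model` is assumed to be a tf.keras model object or a path to a
    SavedModel directory; `name` is a hypothetical output name.
    """
    # Imported lazily so this file loads even without coremltools installed.
    import coremltools as ct

    mlmodel = ct.convert(tf_model, source="tensorflow", convert_to=convert_to)
    path = output_path(name, convert_to)
    mlmodel.save(path)
    return path
```

Calling `convert_to_core_ml` with your own model would write a file that you can then add to an Xcode project. Depending on the model, you may need to pass additional arguments to `ct.convert`, such as input descriptions, which this sketch leaves out.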

If you cannot find anything that fits your use case, you can create your own custom machine learning models with Create ML. You can build and train machine learning models on your Mac, without dealing with the complexity of model training.

If you are curious, consider our article Create ML Explained: Apple's Toolchain to Build and Train Machine Learning Models or tutorials on how to create custom models for your apps.

This article is part of a series of articles derived from the presentation Creating Machine Learning Models with Create ML, presented as a one-time event at the Swift Heroes 2021 Digital Conference on April 16th, 2021.

Where to go next?

If you are interested in learning more about Core ML, how to use machine learning models in your development projects or how to create custom models, you can check out our other articles:

- Create ML Explained: Apple's Toolchain to Build and Train Machine Learning Models

- Creating annotated data sets with IBM Cloud Annotations

- Creating an Object Detection Machine Learning Model with Create ML

- Using an Object Detection Machine Learning Model in Swift Playgrounds

You want to know more? There is more to see...

Recommended Content provided by Apple:

For a deeper dive into Core ML and Apple's machine learning technologies, you can watch the videos released by Apple at WWDC:

- WWDC 2017 - Introducing Core ML

- WWDC 2019 - Core ML 3 Framework

- WWDC 2019 - What's New in Machine Learning

Other Resources:

If you are interested in learning more about Core ML and how to use machine learning models in your apps, you can go through these resources:

- To understand how to optimize the user interface design and user experience of your app for an immersive machine learning experience, explore the dedicated sections within the Human Interface Guidelines.

- To understand how to convert third-party machine learning models to Core ML formats using the coremltools Python package, study the coremltools documentation.

- To learn about the updates in Core ML 3 and some advanced tips on how to use the machine learning frameworks in your apps, read What’s new in Core ML 3 and Advanced Tips for Core ML.