Using HTTP Live Streaming (HLS) for live and on‐demand audio and video

This article introduces the most relevant aspects of HTTP Live Streaming (HLS) and the toolchains Apple provides for live and on-demand media.

On-demand content is on the rise, and whether it is audio only or video, streaming technologies are at the core of any modern multimedia application or service. Among the many technical formats to choose from, HTTP Live Streaming (HLS) is a popular protocol for HTTP-based adaptive bitrate streaming. It was developed by Apple and released in 2009. In 2017, version 7 of the protocol was published as RFC 8216.

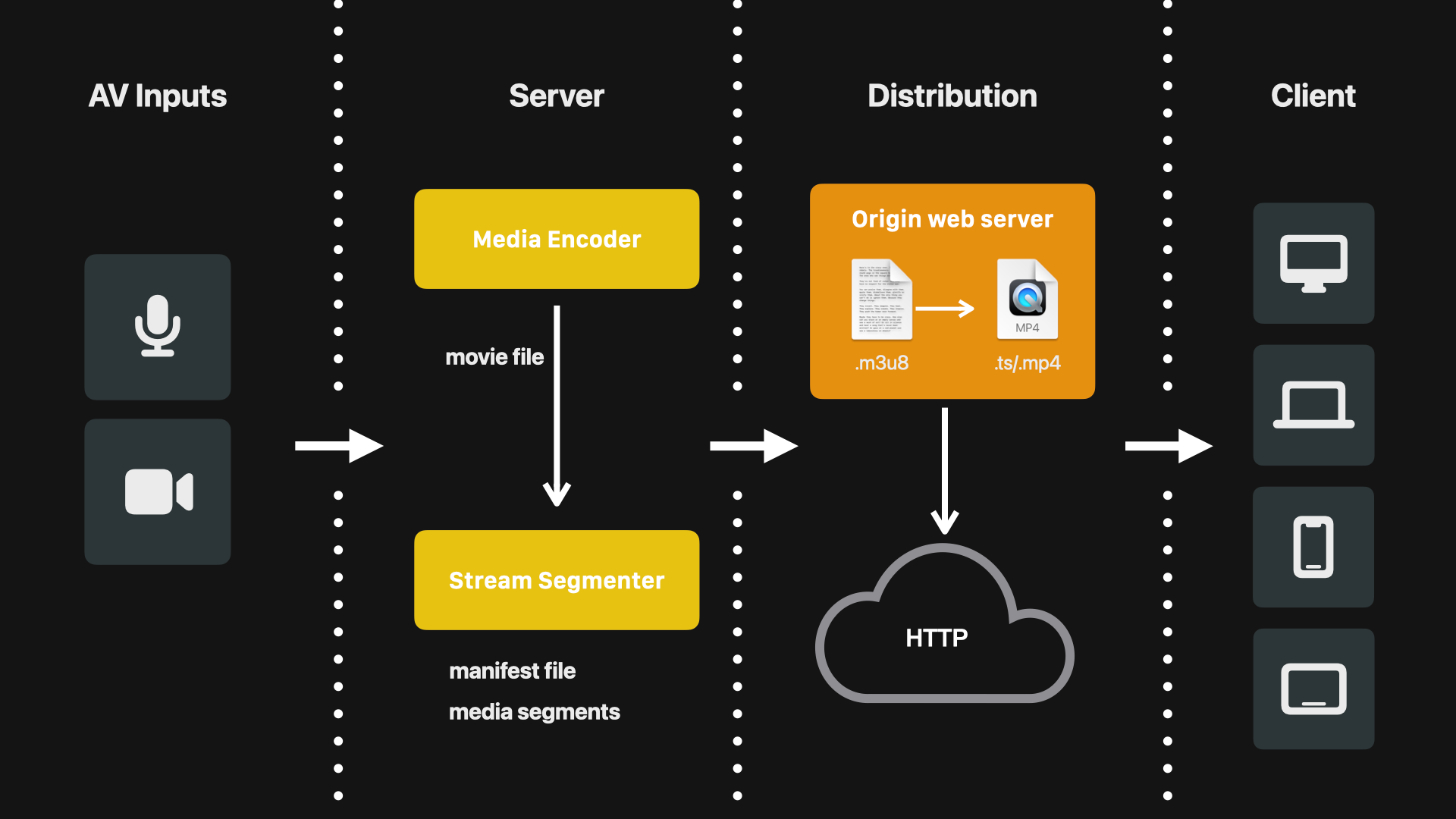

Similar to other adaptive streaming formats, it works by breaking a media stream down into a sequence of small media segments that are delivered as ordinary HTTP file downloads. An extended M3U playlist file directs the client toward the media segments. Video can be encoded with H.264, and audio is supported as AAC, MP3, AC-3 or EC-3. The stream is divided into segments of roughly equal length, and live events can be accommodated by continuously adding new media segments to the playlist file.

One of the advantages of this approach is that any media can be served over standard HTTP connections, including widely adopted HTTP-based content delivery networks, and can traverse firewalls and proxy servers. The client downloads the playlist file, requests the media segment files in the order given by the playlist manifest, and assembles the media as it is played to the user.
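As a rough illustration of that download step, the sketch below turns the entries of a playlist into absolute segment URLs by resolving them against the playlist's own URL. The example.com address and the shortened playlist text are made up for illustration; the playlist format itself is covered in the sections that follow.

```python
from urllib.parse import urljoin


def segment_urls(playlist_url: str, playlist_text: str) -> list:
    """Return the absolute segment URLs of a playlist in playback order."""
    urls = []
    for raw_line in playlist_text.splitlines():
        line = raw_line.strip()
        # Blank lines are ignored; lines starting with '#' are tags or comments.
        if not line or line.startswith("#"):
            continue
        # Every remaining line is a URI, resolved relative to the playlist URL.
        urls.append(urljoin(playlist_url, line))
    return urls


# Hypothetical playlist location and content, for illustration only.
PLAYLIST_URL = "https://example.com/media/show/index.m3u8"
PLAYLIST_TEXT = """#EXTM3U
#EXT-X-TARGETDURATION:10
#EXTINF:10.0,
fileSequenceA.ts
#EXTINF:10.0,
fileSequenceB.ts
#EXT-X-ENDLIST
"""

for url in segment_urls(PLAYLIST_URL, PLAYLIST_TEXT):
    print(url)
```

A real client would then request each URL in turn over plain HTTP, which is why any web server or content delivery network can serve the stream.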

Now let's have a look at Apple's HLS resources and how HLS works in detail.

Getting to know HTTP Live Streaming (HLS)

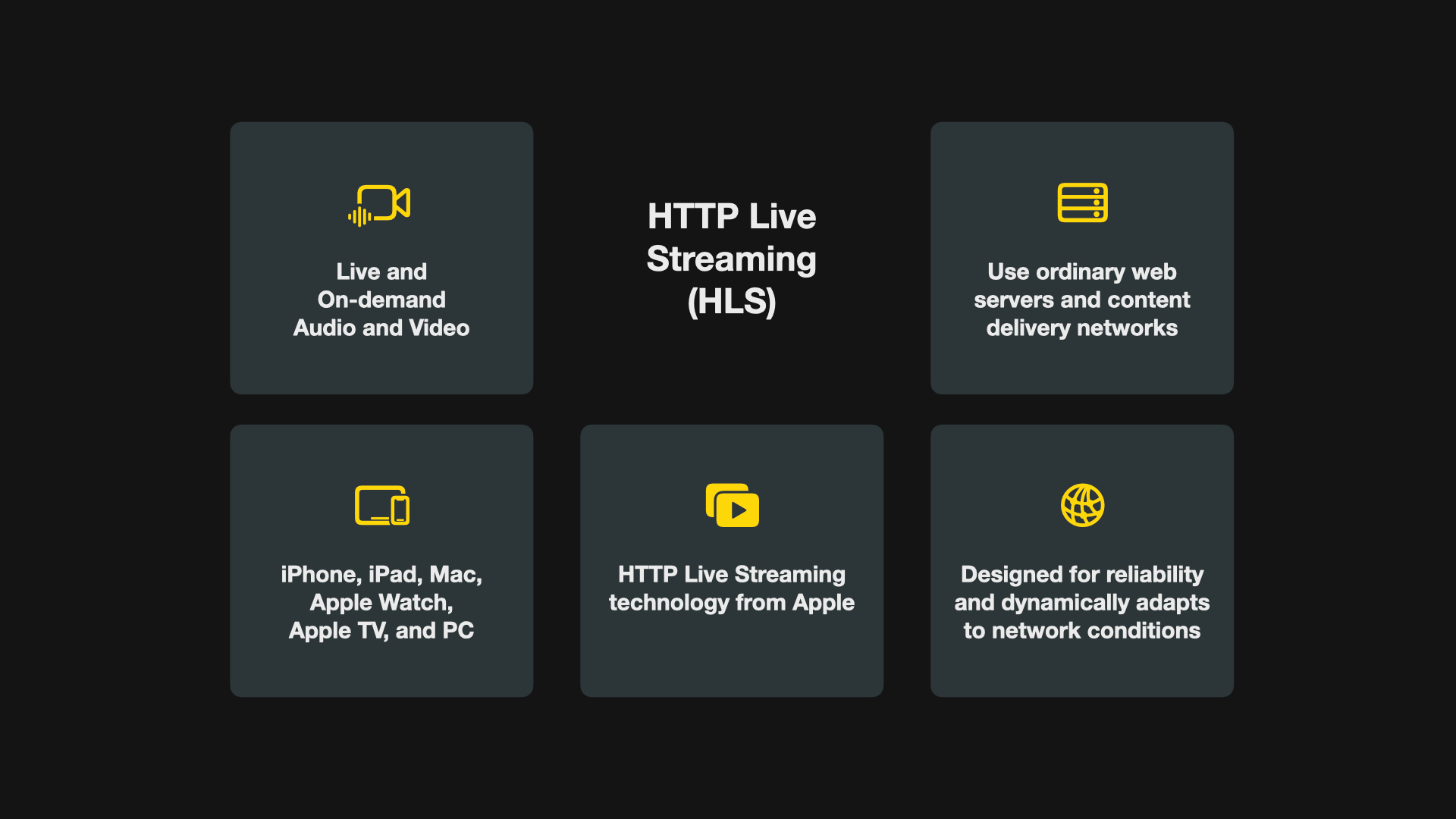

Apple provides extensive resources about HLS and promotes the technology for delivering live and on-demand audio and video to iPhone, iPad, Mac, Apple TV, and even Apple Watch, as well as PC. HLS is advertised as being designed for reliability and as dynamically adapting to network conditions to optimize playback.

Apple provides toolchains for working with HLS, such as the Media Stream Validator tool, as well as streaming examples with .m3u8 playlist and .ts media segment files. In 2019, Apple introduced Low-Latency HLS, promoting latencies below 2 seconds over public networks while remaining compatible with existing clients.

Audio and video can be sent over HTTP from any ordinary web server or content delivery network. Apple supports HLS in multiple frameworks, for example AVKit, AVFoundation, and WebKit.

HLS supports the following features:

- live broadcast & on-demand content

- multiple streams at various bitrates

- intelligent switching of streams based on network conditions

- media encryption and user authentication

All main features of HLS are implemented through the configuration of the manifest playlist files. Let's have a look at some of the different options.

VOD HLS Playlist files

An HLS stream is addressed through an HLS playlist .m3u8 file, which is a manifest that links to chunks of media files that are played in sequence. For example, a playlist file for video-on-demand content could look like this:

#EXTM3U

#EXT-X-PLAYLIST-TYPE:VOD

#EXT-X-TARGETDURATION:10

#EXT-X-VERSION:4

#EXT-X-MEDIA-SEQUENCE:0

#EXTINF:10.0,

fileSequenceA.ts

#EXTINF:10.0,

fileSequenceB.ts

#EXTINF:10.0,

fileSequenceC.ts

#EXTINF:9.0,

fileSequenceD.ts

#EXT-X-ENDLIST

The manifest provides some information that applies to the entire playlist. For example, the EXT-X-PLAYLIST-TYPE tag indicates whether the playlist is mutable. It can be set to EVENT or VOD. In the case of EVENT, the server may append more files to the sequence as they become available. In the case of VOD, the playlist file cannot change.

Other metadata indicates the maximum duration of any media file inside the playlist (EXT-X-TARGETDURATION), the compatibility version of the playlist (EXT-X-VERSION), and the sequence number of the first URL in the playlist (EXT-X-MEDIA-SEQUENCE). The compatibility version requires that the playlist, its media, and the server all comply with the requirements of that protocol version. Each EXTINF tag describes the media file on the line that follows it, identified by its URL, and carries a float value for the duration of that media segment in seconds. Finally, at the end of the playlist, EXT-X-ENDLIST closes the file. The individual media segments are loaded in sequence, making the playback more reliable.
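To make the role of these tags more concrete, here is a minimal sketch of a parser that reads them from a media playlist. It is a simplification that handles only the tags discussed above, and the names `Segment`, `MediaPlaylist`, and `parse_media_playlist` are invented for this example rather than taken from any Apple framework.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Segment:
    sequence: int    # media sequence number of this segment
    duration: float  # duration in seconds, taken from EXTINF
    uri: str


@dataclass
class MediaPlaylist:
    playlist_type: Optional[str] = None  # "VOD", "EVENT", or None if absent
    target_duration: Optional[int] = None
    version: int = 1
    media_sequence: int = 0
    ended: bool = False                  # True once EXT-X-ENDLIST was seen
    segments: List[Segment] = field(default_factory=list)


def parse_media_playlist(text: str) -> MediaPlaylist:
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("a playlist must start with #EXTM3U")

    playlist = MediaPlaylist()
    pending_duration = None
    for line in lines[1:]:
        if line.startswith("#EXT-X-PLAYLIST-TYPE:"):
            playlist.playlist_type = line.split(":", 1)[1]
        elif line.startswith("#EXT-X-TARGETDURATION:"):
            playlist.target_duration = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-VERSION:"):
            playlist.version = int(line.split(":", 1)[1])
        elif line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            playlist.media_sequence = int(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            # "#EXTINF:<duration>,[<title>]" describes the next URI line.
            pending_duration = float(line.split(":", 1)[1].split(",", 1)[0])
        elif line == "#EXT-X-ENDLIST":
            playlist.ended = True
        elif not line.startswith("#") and pending_duration is not None:
            # A URI line: its sequence number counts up from EXT-X-MEDIA-SEQUENCE.
            sequence = playlist.media_sequence + len(playlist.segments)
            playlist.segments.append(Segment(sequence, pending_duration, line))
            pending_duration = None
        # Any other tag or comment is ignored in this sketch.
    return playlist


VOD_PLAYLIST = """#EXTM3U
#EXT-X-PLAYLIST-TYPE:VOD
#EXT-X-TARGETDURATION:10
#EXT-X-VERSION:4
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:10.0,
fileSequenceA.ts
#EXTINF:10.0,
fileSequenceB.ts
#EXTINF:10.0,
fileSequenceC.ts
#EXTINF:9.0,
fileSequenceD.ts
#EXT-X-ENDLIST
"""

playlist = parse_media_playlist(VOD_PLAYLIST)
total = sum(segment.duration for segment in playlist.segments)
print(playlist.playlist_type, len(playlist.segments), total)  # VOD 4 39.0
```

Applied to the video-on-demand example, the sketch finds four segments with a combined duration of 39 seconds, none of them longer than the target duration of 10 seconds.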

EVENT HLS Playlist files

An event playlist is indicated by the value EVENT for EXT-X-PLAYLIST-TYPE. It doesn't have an EXT-X-ENDLIST tag until the event is completed, at which point the ending tag is appended. Also, in this type of playlist, no segments can be removed; the server may only append new ones. An example playlist looks like this:

#EXTM3U

#EXT-X-PLAYLIST-TYPE:EVENT

#EXT-X-TARGETDURATION:10

#EXT-X-VERSION:4

#EXT-X-MEDIA-SEQUENCE:0

#EXTINF:10.0,

fileSequence0.ts

#EXTINF:10.0,

fileSequence1.ts

#EXTINF:10.0,

fileSequence2.ts

#EXTINF:10.0,

fileSequence3.ts

#EXTINF:10.0,

fileSequence4.ts

# The entries for fileSequence5.ts through fileSequence119.ts go here.

#EXTINF:10.0,

fileSequence120.ts

#EXTINF:10.0,

fileSequence121.ts

#EXT-X-ENDLIST

Live HLS Playlist files

For a live session, the playlist file is updated periodically by removing the oldest media segments while adding the segments that have been newly created and are to be made available. For that reason, the EXT-X-ENDLIST tag doesn't exist in such a playlist. An example playlist looks like this:

#EXTM3U

#EXT-X-TARGETDURATION:10

#EXT-X-VERSION:4

#EXT-X-MEDIA-SEQUENCE:1

#EXTINF:10.0,

fileSequence1.ts

#EXTINF:10.0,

fileSequence2.ts

#EXTINF:10.0,

fileSequence3.ts

#EXTINF:10.0,

fileSequence4.ts

#EXTINF:10.0,

fileSequence5.ts

The EXT-X-MEDIA-SEQUENCE tag indicates the sequence number of the first URL within the playlist. When media segments are removed from the playlist, EXT-X-MEDIA-SEQUENCE has to be increased by 1 for every removed segment. This mechanism is called Sliding Window Construction. An updated playlist, after some time has passed, may look like this:

#EXTM3U

#EXT-X-TARGETDURATION:10

#EXT-X-VERSION:4

#EXT-X-MEDIA-SEQUENCE:2

#EXTINF:10.0,

fileSequence2.ts

#EXTINF:10.0,

fileSequence3.ts

#EXTINF:10.0,

fileSequence4.ts

#EXTINF:10.0,

fileSequence5.ts

#EXTINF:10.0,

fileSequence6.ts

Primary HLS Playlist files

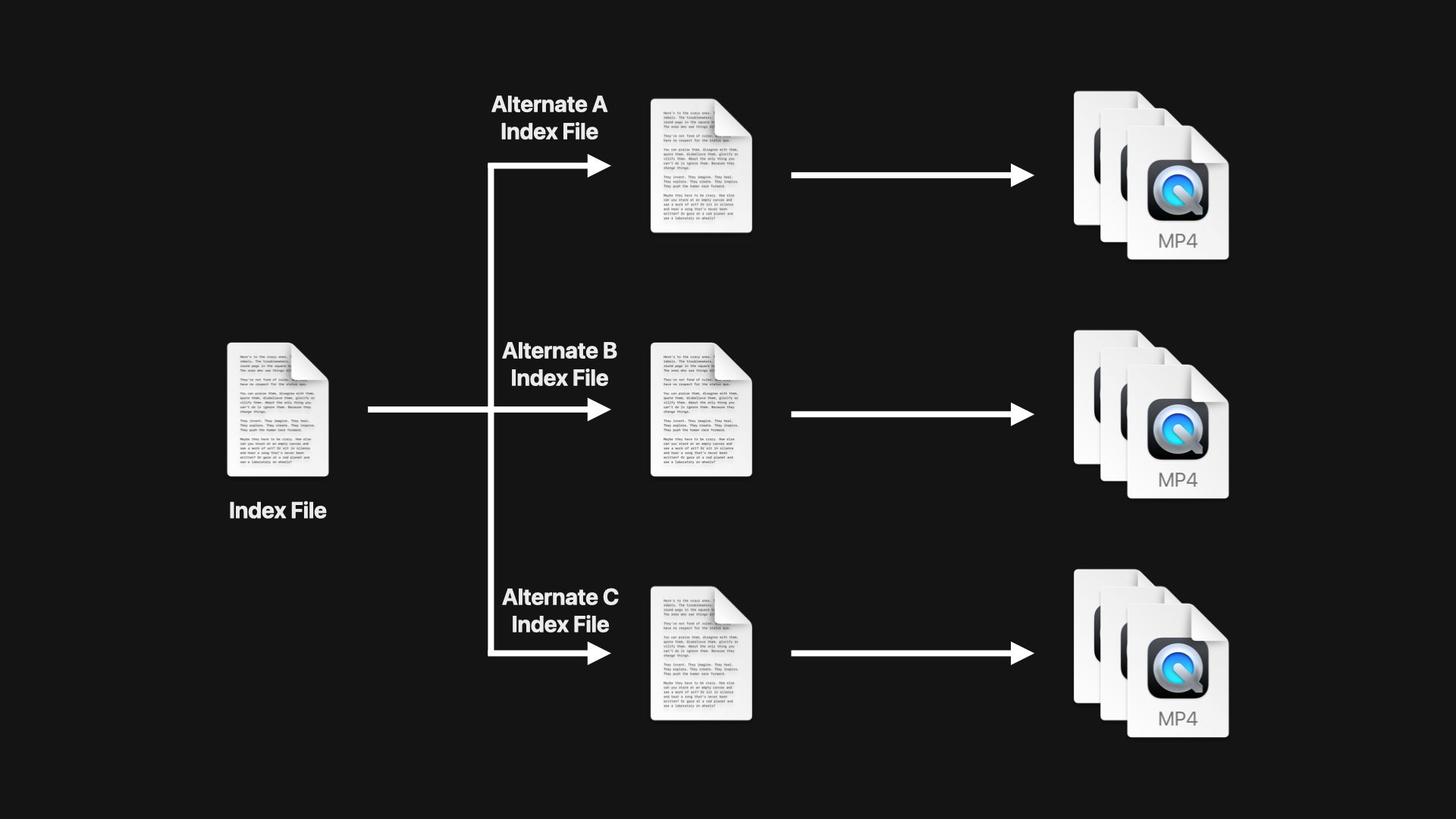

The technology also allows the creation of a primary playlist that links to several media playlists, for example, to offer multiple streams at different resolutions and bitrates. The client then chooses which of these playlists to play based on the current network conditions.

An example playlist looks like this:

#EXTM3U

#EXT-X-VERSION:4

#EXT-X-INDEPENDENT-SEGMENTS

#EXT-X-STREAM-INF:BANDWIDTH=8743534,AVERAGE-BANDWIDTH=8743534,CODECS="avc1.640028,mp4a.40.2",RESOLUTION=1920x1080,FRAME-RATE=29.970

1080p.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=5177606,AVERAGE-BANDWIDTH=5177606,CODECS="avc1.4d401f,mp4a.40.5",RESOLUTION=1280x720,FRAME-RATE=29.970

720p.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=3664591,AVERAGE-BANDWIDTH=3664591,CODECS="avc1.4d401f,mp4a.40.5",RESOLUTION=960x540,FRAME-RATE=29.970

540p.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1345110,AVERAGE-BANDWIDTH=1345110,CODECS="avc1.4d401f,mp4a.40.5",RESOLUTION=640x360,FRAME-RATE=29.970

360p.m3u8

In this case, each EXT-X-STREAM-INF tag describes the stream whose playlist URL follows it: its peak and average bandwidth in bits per second (BANDWIDTH, AVERAGE-BANDWIDTH), the codecs used (CODECS), the resolution (RESOLUTION), and the frame rate (FRAME-RATE). Optionally, it can also state the required level of HDCP content protection (HDCP-LEVEL), which is not shown in this example. This information is then used to determine the appropriate stream for playback. The primary playlist is only loaded once, though. Once the variants have been evaluated, it is assumed that the data does not change.
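The selection step can be sketched in a few lines. The example below reads the EXT-X-STREAM-INF attributes from the primary playlist shown above and picks the highest-bitrate variant that fits a measured throughput. This is only an illustration: the 0.8 safety factor and the names `Variant`, `parse_primary_playlist`, and `choose_variant` are assumptions made for this sketch, and real players such as AVPlayer apply their own, more elaborate heuristics.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Matches KEY=value pairs; quoted values may contain commas (e.g. CODECS).
ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


@dataclass
class Variant:
    bandwidth: int             # peak bits per second, from BANDWIDTH
    resolution: Optional[str]
    codecs: Optional[str]
    uri: str


def parse_primary_playlist(text: str) -> List[Variant]:
    variants = []
    pending = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            attribute_list = line.split(":", 1)[1]
            pending = {key: value.strip('"')
                       for key, value in ATTRIBUTE.findall(attribute_list)}
        elif line and not line.startswith("#") and pending is not None:
            # The URI line that follows the tag names the variant's playlist.
            variants.append(Variant(
                bandwidth=int(pending["BANDWIDTH"]),
                resolution=pending.get("RESOLUTION"),
                codecs=pending.get("CODECS"),
                uri=line,
            ))
            pending = None
    return variants


def choose_variant(variants: List[Variant], measured_bps: float,
                   safety: float = 0.8) -> Variant:
    """Pick the highest-bandwidth variant within a fraction of the throughput."""
    budget = measured_bps * safety
    affordable = [v for v in variants if v.bandwidth <= budget]
    if affordable:
        return max(affordable, key=lambda v: v.bandwidth)
    # Nothing fits: fall back to the lowest-bitrate variant.
    return min(variants, key=lambda v: v.bandwidth)


PRIMARY_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:4
#EXT-X-INDEPENDENT-SEGMENTS
#EXT-X-STREAM-INF:BANDWIDTH=8743534,AVERAGE-BANDWIDTH=8743534,CODECS="avc1.640028,mp4a.40.2",RESOLUTION=1920x1080,FRAME-RATE=29.970
1080p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5177606,AVERAGE-BANDWIDTH=5177606,CODECS="avc1.4d401f,mp4a.40.5",RESOLUTION=1280x720,FRAME-RATE=29.970
720p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=3664591,AVERAGE-BANDWIDTH=3664591,CODECS="avc1.4d401f,mp4a.40.5",RESOLUTION=960x540,FRAME-RATE=29.970
540p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1345110,AVERAGE-BANDWIDTH=1345110,CODECS="avc1.4d401f,mp4a.40.5",RESOLUTION=640x360,FRAME-RATE=29.970
360p.m3u8
"""

variants = parse_primary_playlist(PRIMARY_PLAYLIST)
print(choose_variant(variants, 8_000_000).uri)  # 720p.m3u8
print(choose_variant(variants, 500_000).uri)    # 360p.m3u8
```

With a measured throughput of 8 Mbit/s, the budget is 6.4 Mbit/s, so the 720p stream is the best fit. When no variant fits at all, the sketch falls back to the lowest bitrate rather than refusing to play.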

Ads in HLS Playlist files

It is also possible to add advertising - or any other inserted content, e.g. for branding - to HLS playlist files. The inserted content is separated from the main content by an EXT-X-DISCONTINUITY tag, which informs the player that the encoding of the media changes between the segment before the tag and the segment after it. As an example, this playlist plays an 18-second ad (two segments of 10 and 8 seconds) as a preroll before the main media segments:

#EXTM3U

#EXT-X-TARGETDURATION:10

#EXT-X-VERSION:4

#EXT-X-MEDIA-SEQUENCE:0

#EXTINF:10.0,

preroll0.ts

#EXTINF:8.0,

preroll1.ts

#EXT-X-DISCONTINUITY

#EXTINF:10.0,

fileSequence1.ts

#EXTINF:10.0,

fileSequence2.ts

Creating HLS playlist and media segment files

An important part of providing access to media content with HLS is the creation of the playlist manifest and media segment files. Apple provides the HTTP Live Streaming Tools, a set of command-line tools. Among them, mediafilesegmenter divides input from .mov, .mp4, .m4v, .m4a or .mp3 files into media segments. The tool outputs a .m3u8 playlist file and a number of .ts media segment files. It also supports encrypting the media segments for video-on-demand streams. Then there is mediastreamsegmenter, which creates a media segment stream for live streaming from an MPEG-2 transport stream received over UDP. With tsrecompressor, media can be transcoded into multiple streams at different bitrates.

Other convenient ways to convert files are provided by various desktop applications, including HandBrake and Apple's Compressor app, which is part of the Final Cut Pro bundle and can also be purchased as a standalone app. It is easily the most sophisticated media encoding app I have ever used and supports HDR, HEVC, 360° video, and MXF output as well as camera log conversion. Still, the free and open-source options also do the job.

For cloud-based toolchains, AWS Elemental MediaConvert comes to mind. The powerful service can transcode media files into video-on-demand media segments and supports HLS output options. It can also be automated to a large degree, allows input and output files to be stored directly on Amazon S3, and lets media be delivered over Amazon CloudFront as a powerful, scalable, and performant content delivery network.

Some more information about an automated architecture for media streaming using AWS can be found in the article Developing A Media Streaming App Using SwiftUI In 7 Days.

Getting started with HLS Streaming in your Apps

If you are curious and want to incorporate HTTP Live Streaming (HLS) inside your next app project, there are some more articles on Create with Swift to get you started:

- HLS Streaming with AVKit and SwiftUI

- Switching between HLS streams with AVKit

- Using HTTP Live Streaming (HLS) for live and on‐demand audio and video

Additionally, Marco Falanga - who collaborated on the development of the Alles Neu Land App - also created a GitHub repository with sample code to illustrate the VideoPlayer implementation in SwiftUI and how to monitor the playback buffer to switch to lower-resolution streams based on network conditions. For this, a simple view model is presented that makes use of Combine and observers to continuously check the playback buffers and adjust the stream bandwidth.

This article is part of a series of articles derived from the presentation "How to create a media streaming app with SwiftUI and AWS" presented at the NSSpain 2021 conference on November 18-19, 2021.