The power of ethics in mobile design

Mobile design is intimate. Phones are tools people hold in their hands and check hundreds of times a day. That’s why mobile developers hold a quiet kind of power: we don’t just make interfaces, we shape behavior, expectations, and trust, whether we mean to or not.

As technology evolves faster than we can follow, the question is no longer whether we can design something delightful, but whether we should. Should the app collect user data? Should it ask for permission or simply act? Should it nudge, or pause? Emerging technologies –from large language models to neural chips– are deepening this relationship, blurring the boundary between user and system.

Design ethics isn’t a constraint on creativity; it’s the container that gives creativity meaning. It guides developers to build experiences that respect attention, protect privacy, and reflect the diversity of human life. This is how intention becomes visible. Values like clarity, accessibility, and delight aren’t ornamental ideals; they determine not only what our apps do, but how they make people feel.

Design teaches people what to expect, what to trust, and what to want next. It shapes personal routines, communication patterns –even identity. Yet apps often overcomplicate simple moments, replacing understanding with friction and delight with noise. Simplicity, when grounded in empathy, is not a limitation; it is a strength.

Nietzsche, Foucault, and Derrida challenged the idea of universal morality, arguing that ethical systems are shaped by culture, power, and interpretation. From this perspective, ethics is not a fixed dogma but a living conversation that must evolve with cultural values and technologies. What counts as “good design” reflects not timeless truth, but the shifting morals of an era.

Design less around what you create and more around whom you create it for. As interfaces grow more adaptive and conversational, the very definition of “user interface design” begins to dissolve, giving way to fluid experiences that merge cognition, language, and computation.

Ethical design helps navigate responsibility with intention. It shapes products that delight without manipulation, include without assumption, and empower without exploitation.

From Growth to Good

One of the most challenging aspects of digital product development is striking a balance between growth and good. Growth measures scale; Good measures impact. Growth dominates the dashboards, while Good rarely appears in the metrics. Ethical design recognizes the difference and chooses consciously between them.

Technological waves, from the steam engine to AI, have expanded what humans can do. They’ve also raised new questions about fairness, agency, and harm. Algorithms inherit human assumptions and biases, then amplify them at scale. They are not neutral; they are opinions embedded in math and lines of code.

Our mission is to align technology with humanity’s best interests –to respect attention, improve well-being, and strengthen communities.

— Center for Humane Technology

A UI pattern is not merely a layout; it’s a script for behavior. Defuturing –a term coined by Tony Fry– describes how design can unintentionally undermine the futures it aims to improve. Every interaction has a cognitive, emotional, and environmental cost.

Wearables make this even more personal. Consider the Apple Watch, often described as the most intimate companion. It’s always on the wrist, collecting data all day and night. Ethical design asks: how should we use this intimacy responsibly?

Regulation can respond, but it cannot anticipate. The responsibility for foresight rests with the creators.

Practicing Ethical Mobile Design

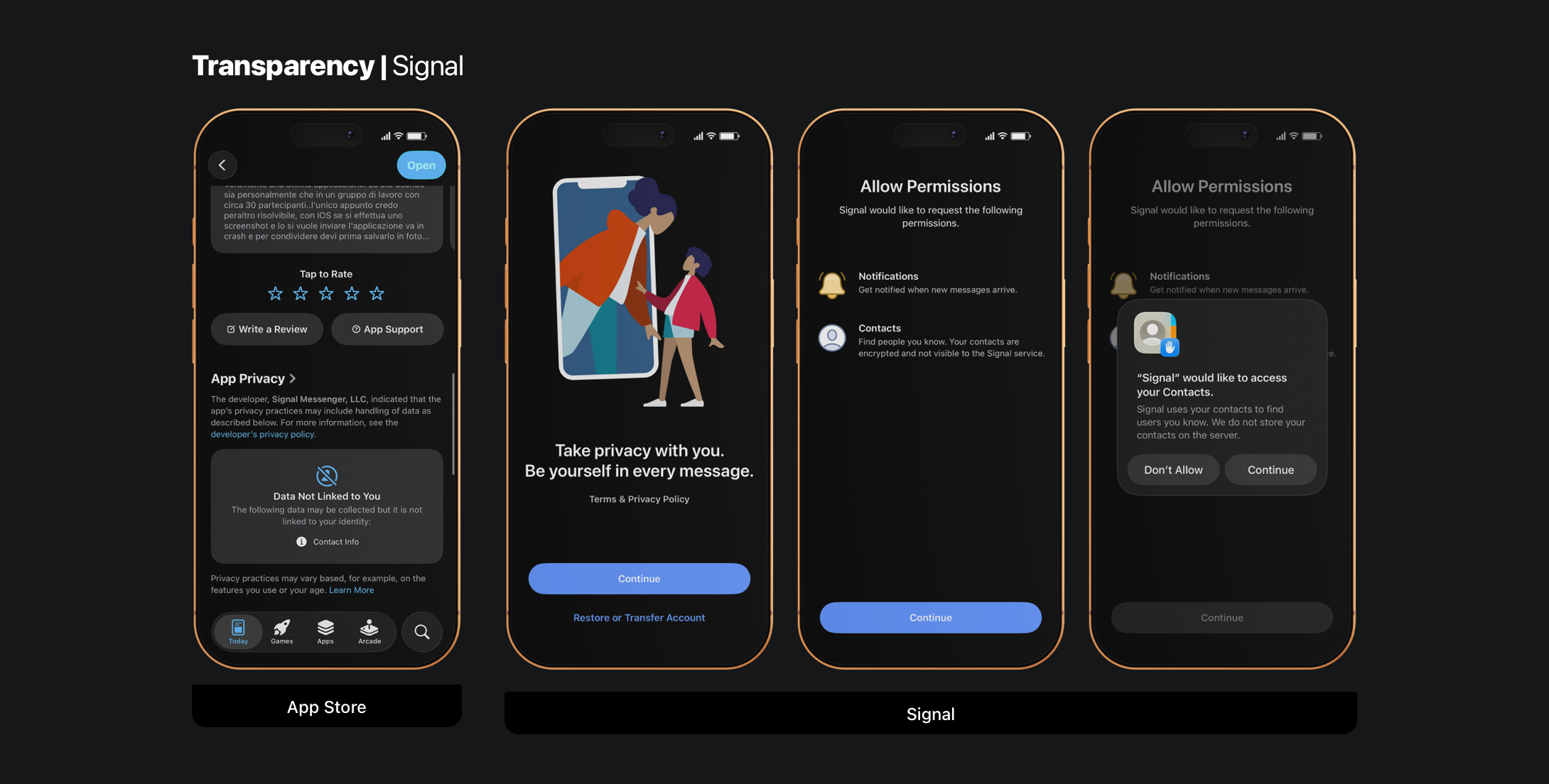

Transparency & Trust

Every app relationship begins with a moment of trust: a permission prompt or a request for personal data. Ethical design makes these choices visible, understandable, and reversible. It explains why something is needed and never penalizes someone for saying no.
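Those qualities can be built into the data model rather than left to copywriting. The following is a minimal Swift sketch. `Capability`, `Decision`, `PermissionRequest`, and `nearbyStoresMode(for:)` are hypothetical names, not Apple APIs. A request cannot be created without a plain-language purpose. Its decision stays mutable, so a choice can be reversed. A denial routes to a fallback instead of blocking the feature.

```swift
// Hypothetical types for illustration only; not part of any Apple framework.
enum Capability {
    case location, contacts, camera
}

enum Decision {
    case undecided, granted, denied
}

struct PermissionRequest {
    let capability: Capability
    let purpose: String                  // plain-language reason shown before the system prompt
    var decision: Decision = .undecided  // mutable, so people can change their mind later

    // Refuse to build a request that does not explain why the data is needed.
    init?(capability: Capability, purpose: String) {
        if purpose.allSatisfy({ $0.isWhitespace }) { return nil }
        self.capability = capability
        self.purpose = purpose
    }
}

// Hypothetical feature gate: declining leads to a fallback, never a dead end.
func nearbyStoresMode(for request: PermissionRequest) -> String {
    switch request.decision {
    case .granted:
        return "Showing stores near your current location"
    case .denied, .undecided:
        return "Search stores by city or postcode"
    }
}

if var request = PermissionRequest(
    capability: .location,
    purpose: "We use your location only to list stores near you."
) {
    request.decision = .denied
    print(nearbyStoresMode(for: request))   // Search stores by city or postcode
    request.decision = .granted             // reversible: the person opts in later
    print(nearbyStoresMode(for: request))   // Showing stores near your current location
}
```

On iOS, the stated purpose would normally also appear in the usage-description string the system shows in its own prompt, for example `NSLocationWhenInUseUsageDescription` for location. People can change their answer later in Settings. The sketch only models the app's side of that promise.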

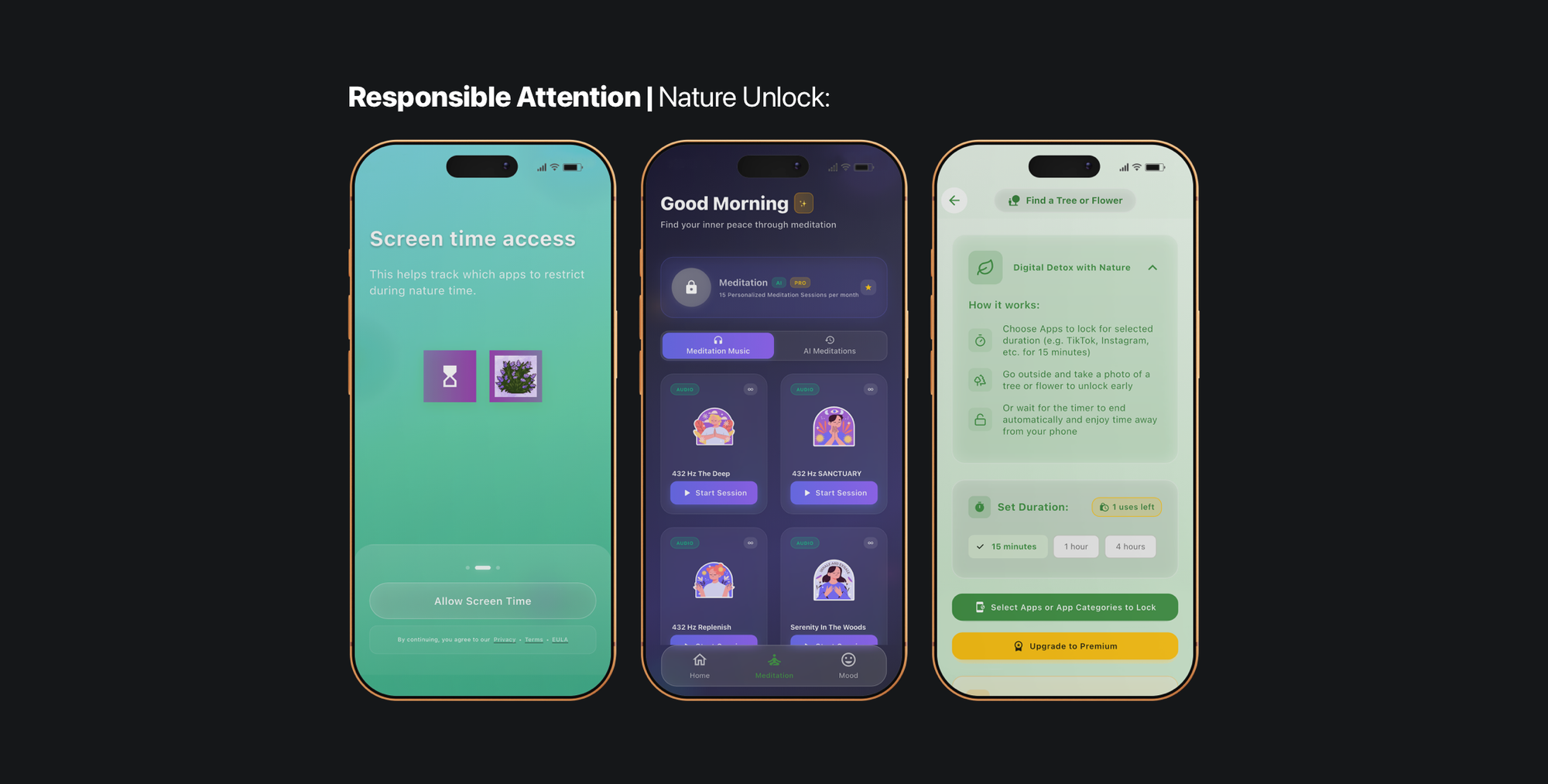

Responsible Attention

Notifications, infinite feeds, and reward loops compete for human focus. Ethical apps cultivate presence rather than dependency. Technology should help people stay mindful of their time and energy.
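Respecting attention can be written down as policy rather than left to good intentions. The sketch below assumes a hypothetical `NotificationBudget` type, which is not part of Apple's UserNotifications framework. It allows a small number of non-urgent notifications per day and stays silent during quiet hours. The limit of two and the 22:00 to 07:00 window are illustrative values, not recommendations.

```swift
// Hypothetical app-side policy; not part of UserNotifications.
struct NotificationBudget {
    let dailyLimit: Int   // maximum non-urgent notifications per day
    let quietStart: Int   // hour (0-23) when quiet time begins
    let quietEnd: Int     // hour (0-23) when quiet time ends
    private(set) var sentToday = 0

    init(dailyLimit: Int, quietStart: Int, quietEnd: Int) {
        self.dailyLimit = dailyLimit
        self.quietStart = quietStart
        self.quietEnd = quietEnd
    }

    func isQuiet(atHour hour: Int) -> Bool {
        if quietStart <= quietEnd {
            return hour >= quietStart && hour < quietEnd
        }
        // The window wraps past midnight, e.g. 22:00 to 07:00.
        return hour >= quietStart || hour < quietEnd
    }

    // Allows (and records) a notification only outside quiet hours and within the daily limit.
    mutating func requestSlot(atHour hour: Int) -> Bool {
        guard !isQuiet(atHour: hour), sentToday < dailyLimit else { return false }
        sentToday += 1
        return true
    }

    mutating func resetForNewDay() {
        sentToday = 0
    }
}

var budget = NotificationBudget(dailyLimit: 2, quietStart: 22, quietEnd: 7)
for hour in [9, 13, 18, 23] {
    print(hour, budget.requestSlot(atHour: hour))
}
// 9 true, 13 true, 18 false (daily limit reached), 23 false (quiet hours)
```

In a real app, a check like this would run before a request is handed to `UNUserNotificationCenter`. The app would reset the budget when a new day begins.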

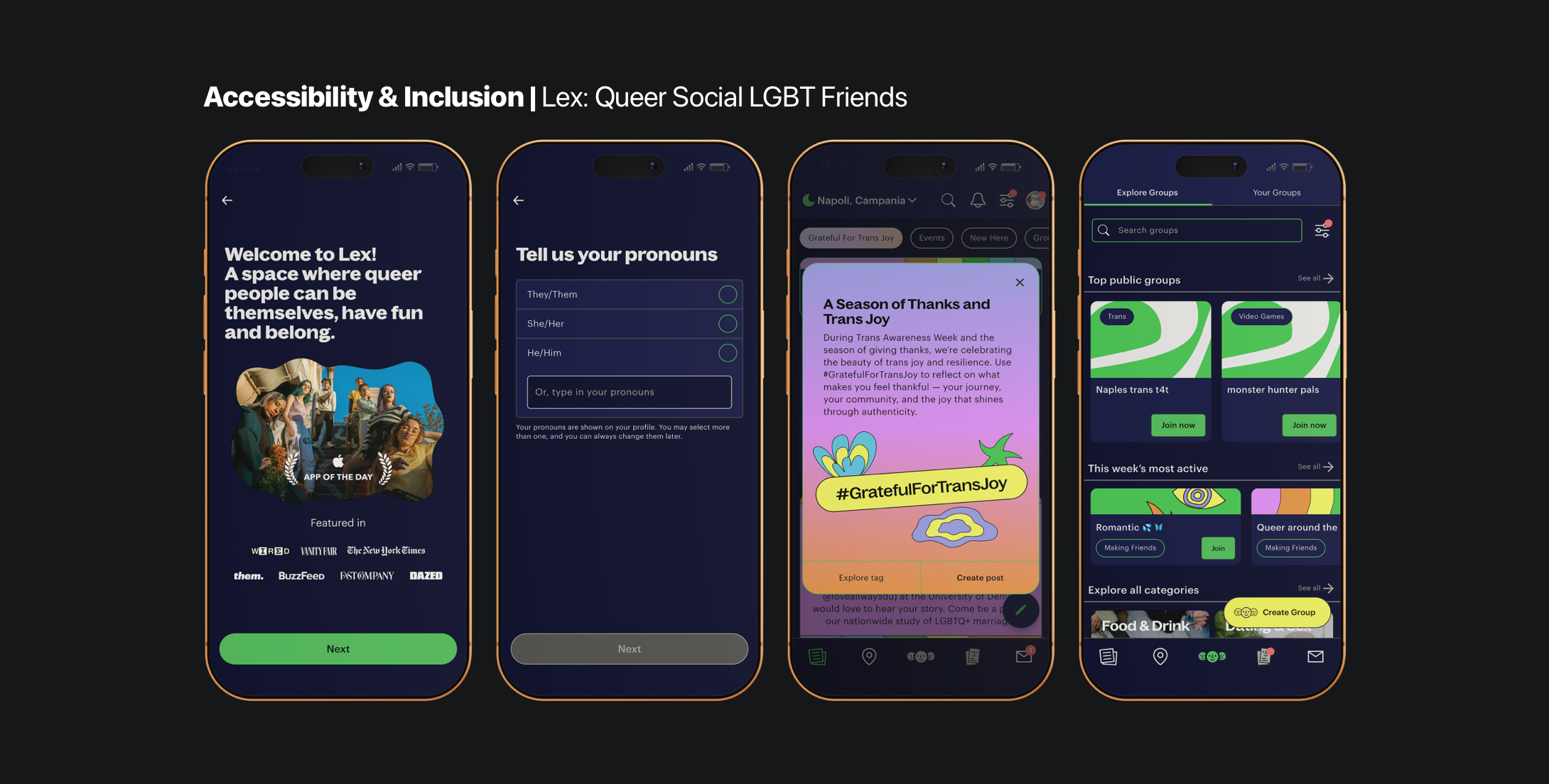

Accessibility & Inclusion

Accessibility isn’t an afterthought; it’s empathy expressed through craft. It’s what allows everyone to participate in the experience you’re building.

Apple offers one of the strongest accessibility frameworks in the industry, with tools like VoiceOver, Dynamic Type, color contrast, and haptics. SwiftUI weaves accessibility directly into the structure of the interface:

```swift
import SwiftUI

Text("Welcome back!")
    .font(.title)
    .accessibilityLabel("Welcome back, ready to continue?") // VoiceOver reads this label instead of the visible text
```
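Color contrast, one of the tools named above, is the item that reduces to plain arithmetic. As a sketch, the helpers below implement the WCAG 2.x contrast-ratio formula. `linearize`, `relativeLuminance`, `contrastRatio`, and `meetsAA` are our own hypothetical names, not SwiftUI API. The formula compares the relative luminance of two colors. Level AA expects at least 4.5:1 for body text and 3:1 for large text.

```swift
import Foundation

typealias RGB = (r: Int, g: Int, b: Int)   // 8-bit sRGB components, 0...255

// Linearize one sRGB channel, following the WCAG 2.x relative-luminance definition.
func linearize(_ channel: Int) -> Double {
    let c = Double(channel) / 255.0
    return c <= 0.03928 ? c / 12.92 : pow((c + 0.055) / 1.055, 2.4)
}

func relativeLuminance(_ color: RGB) -> Double {
    0.2126 * linearize(color.r) + 0.7152 * linearize(color.g) + 0.0722 * linearize(color.b)
}

// Ranges from 1:1 (identical colors) to 21:1 (black on white).
func contrastRatio(_ first: RGB, _ second: RGB) -> Double {
    let l1 = relativeLuminance(first)
    let l2 = relativeLuminance(second)
    return (max(l1, l2) + 0.05) / (min(l1, l2) + 0.05)
}

// WCAG AA: at least 4.5:1 for body text, 3:1 for large text.
func meetsAA(_ foreground: RGB, on background: RGB, largeText: Bool = false) -> Bool {
    contrastRatio(foreground, background) >= (largeText ? 3.0 : 4.5)
}

let white: RGB = (255, 255, 255)
let gray: RGB = (136, 136, 136)   // #888888
print(contrastRatio(gray, white))                   // roughly 3.5
print(meetsAA(gray, on: white))                     // false
print(meetsAA(gray, on: white, largeText: true))    // true
```

Mid-gray #888888 on white passes only as large text. This is the kind of check automated accessibility audits run across a whole palette.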

But accessibility is only one half of the equation; inclusion completes it.

The words we choose, the pronouns we use, and the illustrations we show all communicate who belongs. Inclusive design considers the diversity of names, identities, bodies, cultures, and languages. It adapts copy and visuals so people can see themselves reflected in the product.

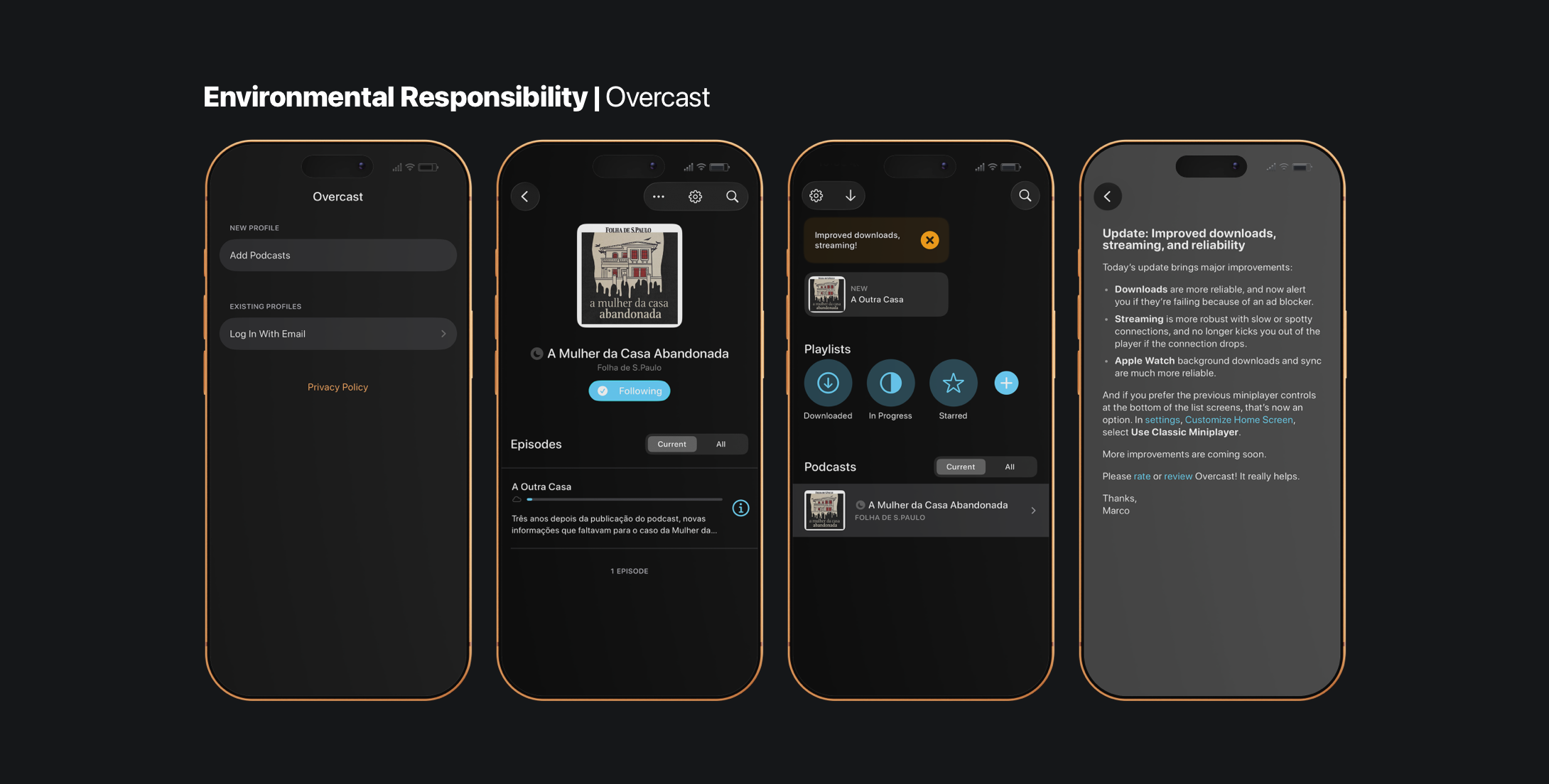

Environmental Responsibility

Digital experiences consume physical resources. Every animation, background fetch, and network request affects batteries, network infrastructure, and thermal output. Optimising for efficiency isn’t just about making apps feel faster; it's about reducing waste across the entire chain of digital operations. Sustainable code respects the environment, the infrastructure that powers it, and the people who pay for its use through battery life, data plans, and device wear.
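One of the simplest efficiency wins is not making the same request twice. The following is a minimal sketch, assuming a hypothetical `ResponseCache` type and a five-minute freshness window chosen only for illustration. A response is reused while it is fresh, and the network is touched only after it expires.

```swift
// Hypothetical in-memory cache; `fetch` stands in for a real network request.
final class ResponseCache {
    private var entries: [String: (value: String, storedAt: Double)] = [:]
    private let maxAge: Double   // seconds a stored response counts as fresh

    init(maxAge: Double) {
        self.maxAge = maxAge
    }

    // `now` is passed in (seconds) so the policy can be tested without a real clock.
    func value(for key: String, now: Double, fetch: () -> String) -> String {
        if let entry = entries[key], now - entry.storedAt < maxAge {
            return entry.value   // fresh: no radio wake-up, no server round trip
        }
        let fresh = fetch()
        entries[key] = (value: fresh, storedAt: now)
        return fresh
    }
}

let cache = ResponseCache(maxAge: 300)   // five minutes, chosen only for illustration
var networkCalls = 0
for t in [0.0, 30.0, 120.0, 400.0] {
    _ = cache.value(for: "forecast", now: t) {
        networkCalls += 1
        return "Sunny"
    }
}
print(networkCalls)   // 2: only t = 0 and t = 400 reach the network
```

Production apps would more likely rely on `URLCache` and HTTP caching headers, or defer non-urgent work with `BGTaskScheduler`. The point of the sketch is the policy, not the mechanism: fewer wake-ups and fewer bytes on the wire.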

Environmental responsibility in software isn’t only about carbon emissions. It also means designing with awareness of communities and social impact, treating the ecosystem around an app as a non-human persona with its own needs and limits. Efficient apps reduce strain on shared server infrastructure, minimise digital waste, and avoid patterns that encourage unnecessary consumption.

Responsible software supports healthier habits, sustainable digital ecosystems, and the shared resources we all depend on, while reducing its own carbon footprint.

Co-Creating with AI

GenAI intensifies every ethical question in mobile design. Machine learning models inherit the biases of their training data, shaped by history, culture, prejudice, and inequality. These biases surface in subtle ways inside the products people use every day, shaping how someone sees, learns, or trusts.

AI is a magnifying mirror of our society. — Anne Bioulac, Women in Africa Initiative

Ethical designers treat datasets like materials: they audit, curate, and refine them to preserve integrity and accuracy. Philosopher Shannon Vallor calls this cultivating technomoral virtues –modern habits like honesty, justice, and empathy that help us navigate emerging technologies.
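An audit can start with something as plain as counting who appears in the data. The sketch assumes a hypothetical `Sample` record tagged with a language code and an illustrative 15% threshold. The function `representationReport(of:minimumShare:)` reports each group's share and flags the groups that fall below the threshold. A real audit would examine many more dimensions, and a flag is a prompt for human review, not a verdict.

```swift
// Hypothetical record: a training example tagged with the language it represents.
struct Sample {
    let text: String
    let language: String
}

struct GroupShare {
    let group: String
    let share: Double            // fraction of all samples, 0...1
    let underrepresented: Bool   // share fell below the chosen threshold
}

// Counts each group's share of the dataset and flags groups below `minimumShare`.
func representationReport(of samples: [Sample], minimumShare: Double) -> [GroupShare] {
    guard !samples.isEmpty else { return [] }
    var counts: [String: Int] = [:]
    for sample in samples {
        counts[sample.language, default: 0] += 1
    }
    let total = Double(samples.count)
    return counts
        .map { entry -> GroupShare in
            let share = Double(entry.value) / total
            return GroupShare(group: entry.key, share: share, underrepresented: share < minimumShare)
        }
        // Largest groups first; ties broken alphabetically so the report is stable.
        .sorted { $0.share != $1.share ? $0.share > $1.share : $0.group < $1.group }
}

let samples = ["en", "en", "en", "en", "en", "en", "es", "es", "fr", "yo"]
    .enumerated()
    .map { Sample(text: "example \($0.offset)", language: $0.element) }

for row in representationReport(of: samples, minimumShare: 0.15) {
    print(row.group, row.share, row.underrepresented ? "review" : "ok")
}
// fr and yo (10% each) are flagged for review
```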

The rise of AI is being used as an excuse to not talk about DEI and community-centred projects.

— Mitzi Okou, co-founder of WATBD

Using AI as a co-creator or embedding it as a feature shifts the questions from technical to ethical. Developers should understand the values encoded in a model and who benefits from them. Who is being left out? What stories does it amplify, or silence? These choices, from model selection and training data to system integration, are design choices with real human impact.

In Europe, these concerns have shaped one of the most comprehensive regulatory frameworks for AI. The European Commission stresses that AI must remain human-centric, trustworthy, and sustainable, especially in the public sector, where modernisation efforts must be paired with inclusion, transparency, and environmental responsibility.

The AI Act is part of a wider package of policy measures designed to support trustworthy, rights-respecting, and socially aligned AI while still encouraging innovation. The Commission’s AI Excellence strategy links AI advancement to the European Green Deal, adding the values of sustainability, security, and inclusion, and identifies public-sector modernisation as a high-impact area. The 2021 Coordinated Plan on AI reinforces these commitments, centring human-centricity, sustainability, and inclusion as non-negotiable principles.

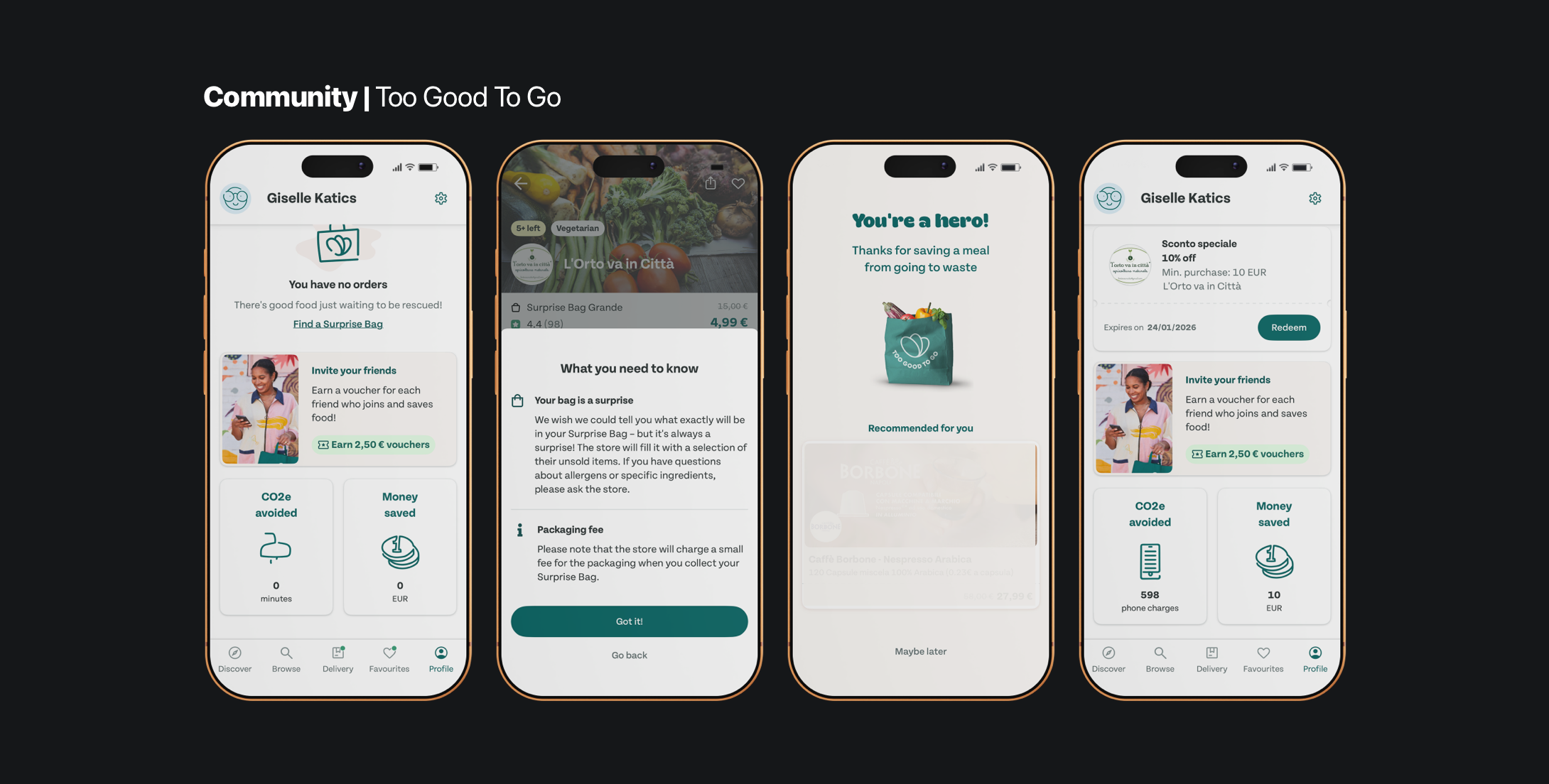

Community and Non-Human Personas

Ethical design expands beyond individual users. It considers communities, systems, and the planet. Products should treat communities and the environment as non-human personas, acknowledging that every product exists within larger ecosystems.

The concept of the Pluriverse inspires developers to move beyond the “universal user”. It recognises a world made up of many worlds: cultural, linguistic, and sensory. Designing for difference, instead of erasing it, leads to experiences that feel local, inclusive, and human. The goal is not to homogenise or flatten, but to honour difference.

The Future Is Ethical by Design

Design shapes how people communicate, how they focus, and what they trust. Design helps people make meaning –and meaning requires clarity and courage. Its influence reaches far beyond the screen.

There’s a misconception that simplicity limits creativity –that more features and more motion equal more value. But clarity is one of the highest forms of accessibility. Direct, honest communication invites people in. Ethical design isn’t about constraining imagination; it’s about directing it toward outcomes that are human-centred, sustainable, and future-friendly.

The future of mobile design won’t be defined by faster chips, smarter models, or richer animations. It will be defined by how thoughtfully they are used. Ethical design aligns technical possibility with human dignity. It asks us to build with intention, question standards, and design for the world we want to live in, not just the world that is technologically convenient.

The apps we create today become the habits, memories, and tools of tomorrow. When we design with clarity, empathy, and responsibility, we build more than products. We build futures worth inheriting.