Using an Object Detection Machine Learning Model in an iOS App

By the end of this tutorial, you will be able to use an object detection Core ML model in an iOS app with the Vision framework.

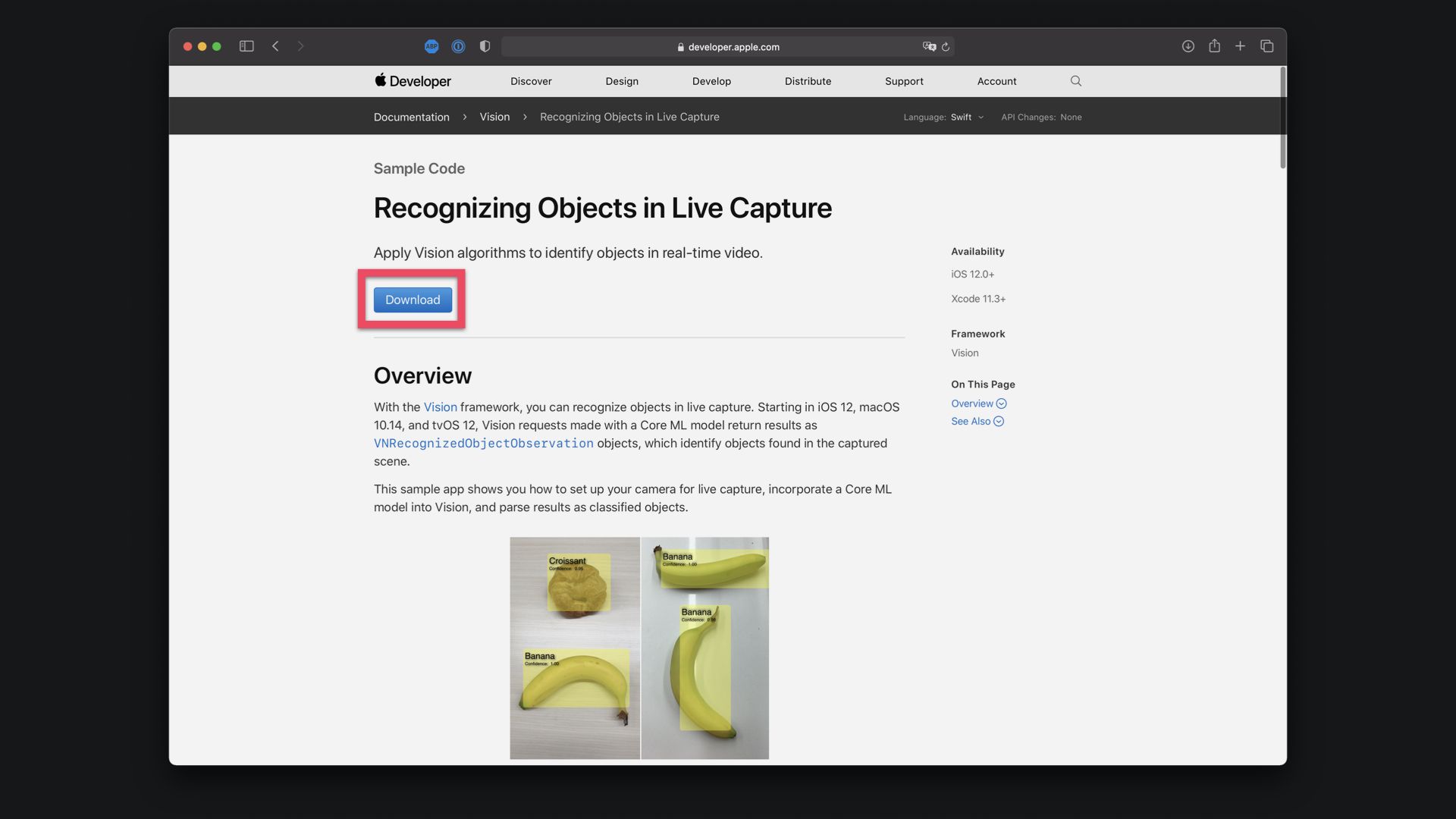

Apple's official developer documentation includes a number of sample projects that show how to use Core ML models with the Vision framework in an iOS app. For this short example, I am using a boilerplate Xcode project that Apple provides on its website to recognize objects in live capture, that is, in a live video feed. Other sample code projects show how to detect objects in still images, classify images for categorization and search, or track multiple objects in video.

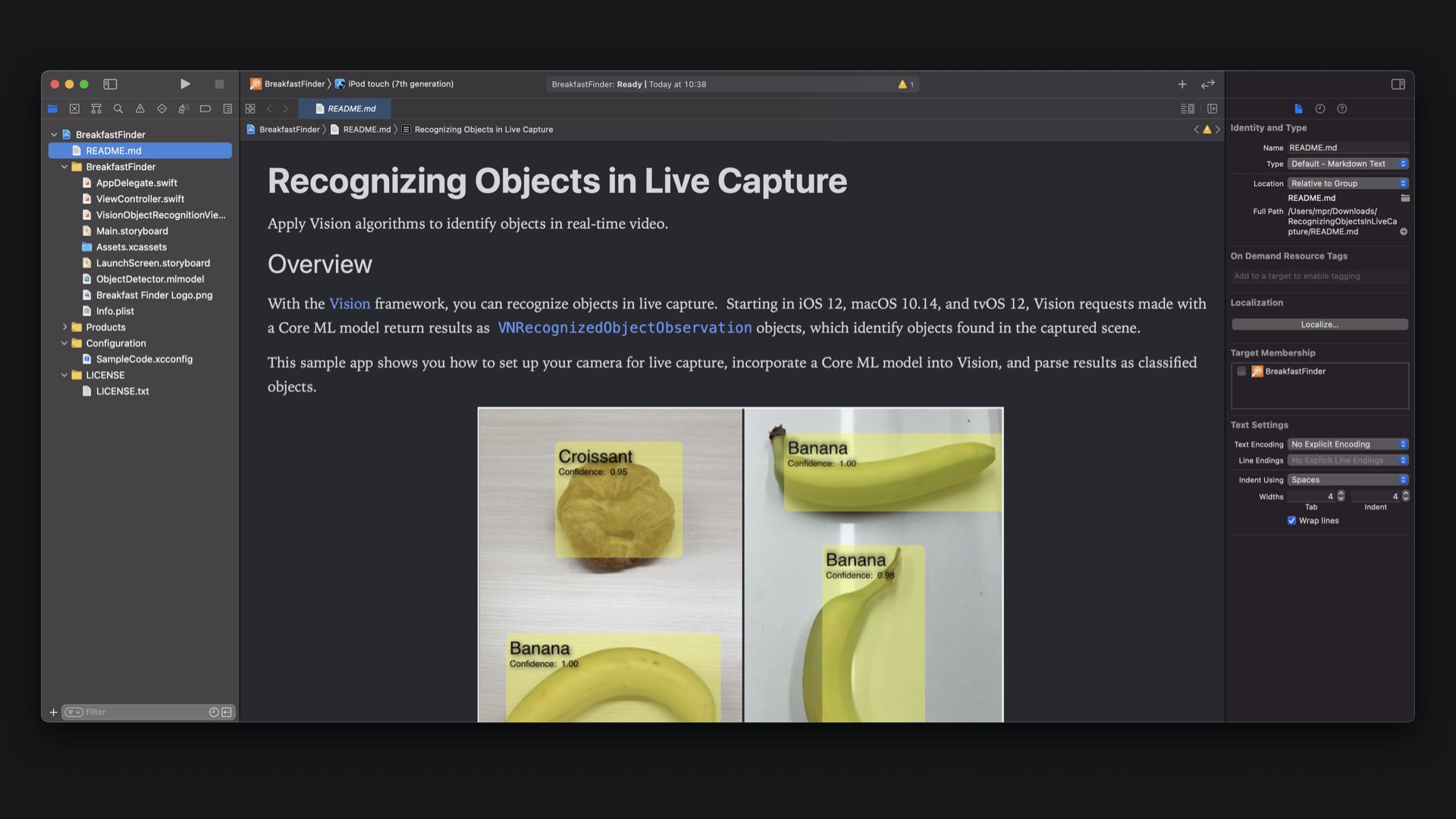

Once it is downloaded, you can open the Xcode project, then build and run the app. It works immediately with the bundled ObjectDetector model, which is trained to identify six breakfast foods.

Now, let's have a closer look at how the sample project works.

The boilerplate project uses a simple view controller that presents the camera view and draws bounding boxes and labels over the video feed for every object it detects. You can find the Vision framework implementation in the VisionObjectRecognitionViewController.swift file.
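To make the labeling and drawing step more concrete, here is a minimal sketch of how detection results can be turned into labels and boxes. It assumes the request's results are `VNRecognizedObjectObservation` values, which is what Vision returns for Core ML object detection models. The `Detection` struct, `flippedToTopLeft(_:imageHeight:)`, `detections(from:imageSize:minimumConfidence:)` and the 0.5 default threshold are hypothetical, not part of Apple's sample project. Vision reports bounding boxes in normalized coordinates (0 to 1) with the origin in the lower-left corner, so the sketch scales them with `VNImageRectForNormalizedRect` and then flips the y-axis for UIKit's top-left origin.

```swift
import CoreGraphics
import Vision

/// Hypothetical, simplified description of one detected object.
struct Detection {
    let label: String
    let confidence: Float
    /// Bounding box in image pixels, origin at the top-left (UIKit convention).
    let rect: CGRect
}

/// Moves a pixel rectangle from a lower-left origin (Vision) to a
/// top-left origin (UIKit) by flipping it vertically.
func flippedToTopLeft(_ rect: CGRect, imageHeight: CGFloat) -> CGRect {
    var flipped = rect
    flipped.origin.y = imageHeight - rect.origin.y - rect.height
    return flipped
}

/// Converts the results of a finished object detection request into
/// `Detection` values, keeping only the most confident label per object.
func detections(from results: [VNObservation],
                imageSize: CGSize,
                minimumConfidence: Float = 0.5) -> [Detection] {
    results
        // Object detection models produce VNRecognizedObjectObservation results.
        .compactMap { $0 as? VNRecognizedObjectObservation }
        .compactMap { observation -> Detection? in
            // `labels` is ordered by confidence, so the first entry is the best guess.
            guard let top = observation.labels.first,
                  top.confidence >= minimumConfidence else { return nil }

            // Scale the normalized box (values 0...1) to pixel coordinates.
            let pixelRect = VNImageRectForNormalizedRect(observation.boundingBox,
                                                         Int(imageSize.width),
                                                         Int(imageSize.height))
            return Detection(label: top.identifier,
                             confidence: top.confidence,
                             rect: flippedToTopLeft(pixelRect, imageHeight: imageSize.height))
        }
}
```

Inside a request's completion handler you might call `detections(from: request.results ?? [], imageSize: frameSize)`, where `frameSize` is a placeholder for the pixel size of the analyzed camera frame.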

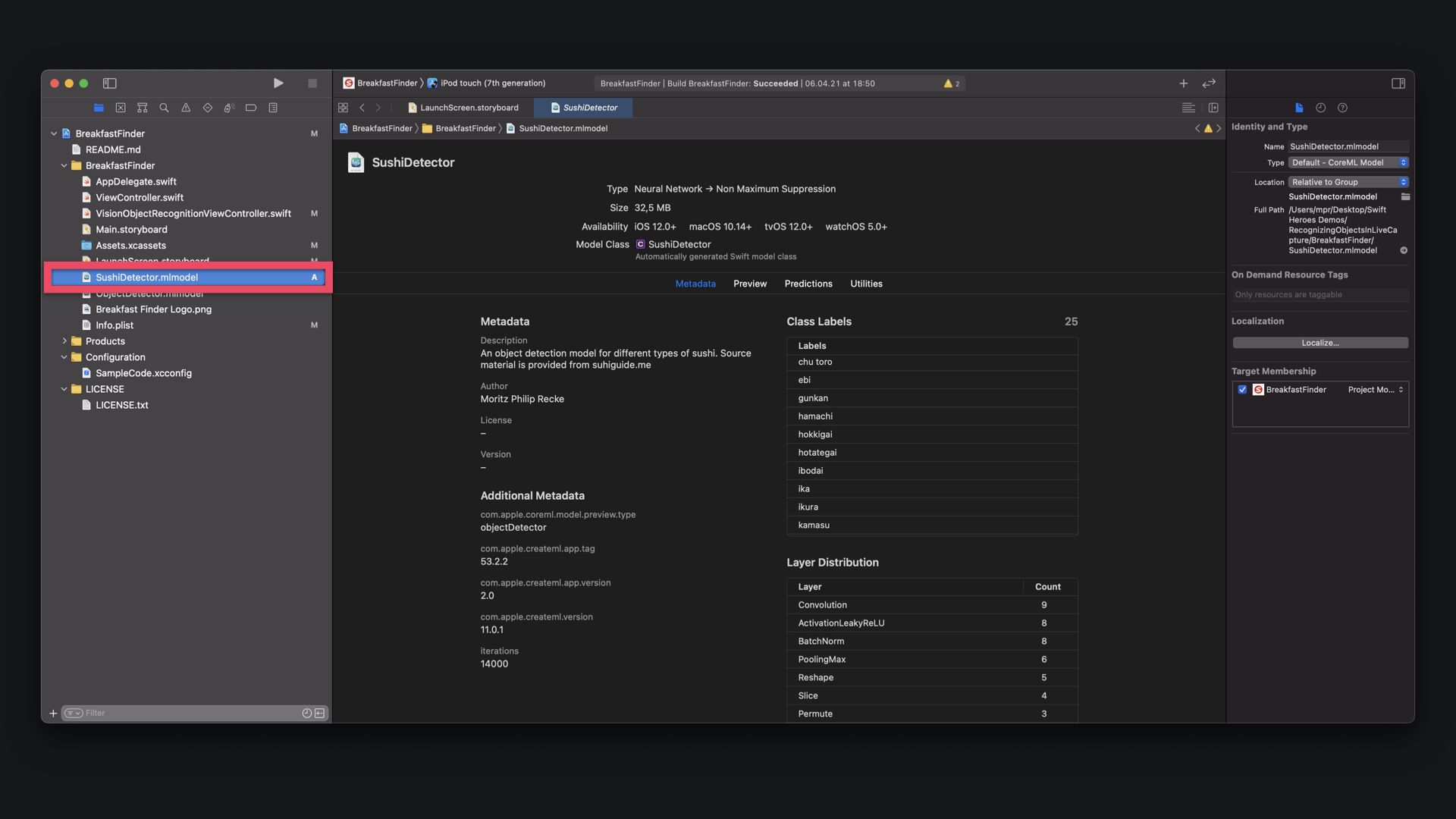

To add your own custom model to the project, drag and drop the Core ML model file into the Xcode project. Make sure to enable "Copy items if needed" so that the model file is copied into the project folder.
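When you add a .mlmodel file, Xcode compiles it into a .mlmodelc bundle at build time, and that compiled bundle is what ships inside the app. If the app reports that the model file is missing, one quick check is to list the compiled models that actually made it into the bundle. The helper below, `bundledModelNames(in:)`, is a hypothetical debugging aid and not part of Apple's sample project; a missing entry usually means the file was not added to the app target.

```swift
import Foundation

/// Hypothetical debugging helper: returns the names of all compiled Core ML
/// models (`.mlmodelc` bundles) that were copied into the given bundle.
func bundledModelNames(in bundle: Bundle = .main) -> [String] {
    // Xcode compiles each .mlmodel into a .mlmodelc directory at build time.
    let urls = bundle.urls(forResourcesWithExtension: "mlmodelc", subdirectory: nil) ?? []
    // Drop the extension so the names match the `forResource:` argument
    // used with `Bundle.url(forResource:withExtension:)`.
    return urls.map { $0.deletingPathExtension().lastPathComponent }.sorted()
}
```

Calling `print(bundledModelNames())` once at launch should list your model (for example SushiDetector) if it was added to the app target correctly.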

In this example I am using SushiDetector, a custom object detection model created to detect different types of sushi. To learn more about creating your own object detection machine learning model with Create ML, check out our step-by-step tutorial.

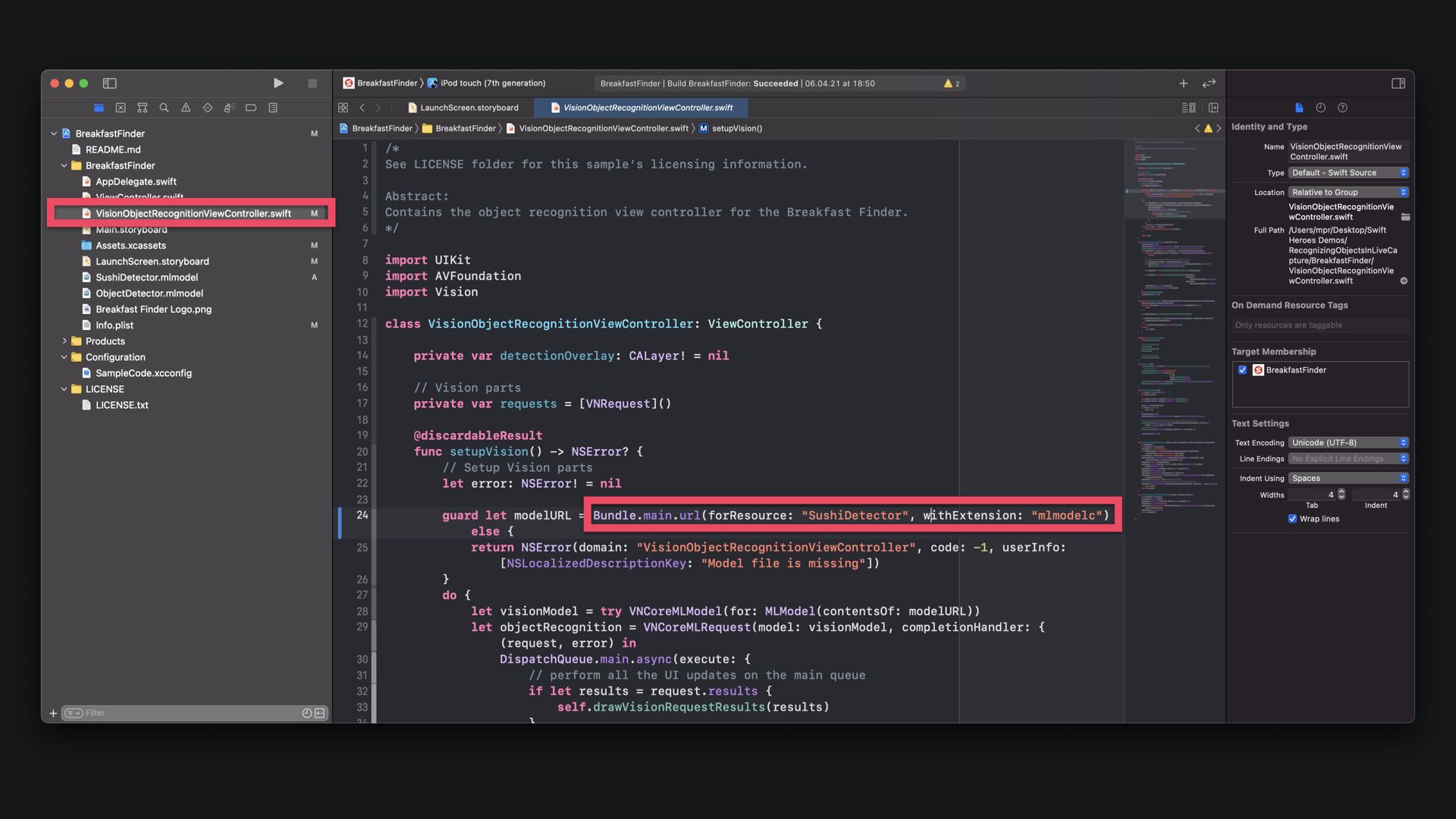

In the VisionObjectRecognitionViewController.swift file, you just have to specify the model name within the setupVision() function; everything else is done for you.

```swift
func setupVision() -> NSError? {
    // [...]

    // Xcode compiles the .mlmodel file into a .mlmodelc bundle at build time,
    // so look up the compiled model. Replace YOURMODELNAME with the name of
    // your model file, for example "SushiDetector".
    guard let modelURL = Bundle.main.url(forResource: "YOURMODELNAME", withExtension: "mlmodelc") else {
        return NSError(domain: "VisionObjectRecognitionViewController", code: -1, userInfo: [NSLocalizedDescriptionKey: "Model file is missing"])
    }

    // [...]

    return error
}
```

To test the app, connect your iPhone and run the app on the device. As you can see in my example, it correctly detects several sushi dishes when I hold the camera close to my lunch bento box.
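Once setupVision() has the model URL, the remaining work is typically to load the compiled model, wrap it in a Vision request, and run that request on each camera frame. The following is a standalone sketch of one common way to do this with `MLModel(contentsOf:)`, `VNCoreMLModel(for:)`, `VNCoreMLRequest` and `VNImageRequestHandler`. It is not a copy of Apple's sample code; the function names `makeDetectionRequest(modelURL:completion:)` and `detectObjects(in:orientation:using:)` and the `.scaleFill` crop option are assumptions chosen for illustration.

```swift
import CoreML
import CoreVideo
import ImageIO
import Vision

/// Hypothetical helper: loads a compiled Core ML model (.mlmodelc) and wraps
/// it in a Vision request. `completion` receives the observations Vision returns.
func makeDetectionRequest(modelURL: URL,
                          completion: @escaping ([VNObservation]) -> Void) throws -> VNCoreMLRequest {
    // Throws if the URL does not point to a valid compiled model.
    let mlModel = try MLModel(contentsOf: modelURL)
    let visionModel = try VNCoreMLModel(for: mlModel)

    let request = VNCoreMLRequest(model: visionModel) { finishedRequest, error in
        if let error = error {
            print("Vision request failed: \(error)")
            completion([])
            return
        }
        completion(finishedRequest.results ?? [])
    }
    // Assumption: stretch each frame to the model's input size.
    request.imageCropAndScaleOption = .scaleFill
    return request
}

/// Hypothetical helper: runs the request on a single camera frame, such as
/// the pixel buffer of a sample buffer delivered by AVCaptureVideoDataOutput.
func detectObjects(in pixelBuffer: CVPixelBuffer,
                   orientation: CGImagePropertyOrientation,
                   using request: VNCoreMLRequest) {
    let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer,
                                        orientation: orientation,
                                        options: [:])
    do {
        // Run the request on this frame; errors are logged, not propagated.
        try handler.perform([request])
    } catch {
        print("Failed to perform Vision request: \(error)")
    }
}
```

The orientation passed to `detectObjects` has to match how the camera frame is oriented relative to the model's expectations; getting it wrong is a common reason for missing or misplaced boxes.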

This tutorial is part of a series of articles derived from the presentation "Creating Machine Learning Models with Create ML", presented as a one-time event at the Swift Heroes 2021 Digital Conference on April 16th, 2021.

Where to go next?

If you are interested in learning more about using machine learning models, or Core ML in general, you can check out these other tutorials:

- Core ML Explained: Apple's Machine Learning Framework

- Create ML Explained: Apple's Toolchain to Build and Train Machine Learning Models

- Use an Image Classification Machine Learning Model in an iOS App with SwiftUI

- Use an Object Detection Machine Learning Model in Swift Playgrounds

- Use an Image Classification Machine Learning Model in Swift Playgrounds

Recommended Content provided by Apple

For a deeper dive into creating object detection machine learning models, you can watch these videos released by Apple at WWDC:

- WWDC 2019 - Core ML 3 Framework

- WWDC 2020 - Explore Computer Vision APIs

- WWDC 2020 - Improve Object Detection models in Create ML

Other Resources

If you are interested in learning more about creating machine learning models with Create ML, you can go through these resources:

- To understand how to create immersive user experiences with machine learning in your app, study the Human Interface Guidelines' dedicated section for Machine Learning.

- To explore the various Core ML models provided by the research community, refer to the Machine Learning section of Apple's developer website.

- Explore Awesome-CoreML-Models for a large collection of machine learning models in Core ML format, to help iOS, macOS, tvOS, and watchOS developers experiment with machine learning techniques.